Introduction

This paper was inspired by the book “CONTENT-BASED ANALYSIS OF DIGITAL VIDEO” by Alan Hanjalic, 2004.

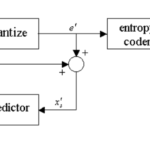

Reliable detection of scene cuts and gradual transitions (fades, wipes, dissolves) can improve coding efficiency: for example, when a scene cut is detected, the next frame can be coded as an I-frame. In addition, a video index or scene index can be supplied to the user.

To improve robustness, it is worth considering a low-pass filter applied to each frame before computing frame differences or block statistics.
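As a minimal sketch of such pre-filtering, the snippet below applies a simple box (mean) filter to a greyscale frame using NumPy only. The function name `box_blur` and the default `radius` are illustrative assumptions; the text does not prescribe a particular filter, and a Gaussian blur (for example OpenCV's `cv2.GaussianBlur`) would serve the same purpose.

```python
import numpy as np

def box_blur(frame: np.ndarray, radius: int = 1) -> np.ndarray:
    """Low-pass filter a greyscale frame with a (2*radius+1)^2 box kernel.

    Hypothetical helper: the kernel type and size are assumptions.
    """
    size = 2 * radius + 1
    # Replicate border pixels so the output keeps the input resolution.
    padded = np.pad(frame.astype(np.float64), radius, mode="edge")
    out = np.zeros(frame.shape, dtype=np.float64)
    # Accumulate the shifted copies of the frame, then average them.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + frame.shape[0], dx:dx + frame.shape[1]]
    return out / (size * size)
```

The filtered frame would then be fed to the histogram or frame-difference computation in place of the raw frame.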

Detection of video shots (scene cuts) and gradual transitions is based on discontinuities in model parameters across the frames around the scene change.

Statistics based on the sum of absolute differences (SAD) between successive frames are unreliable because of noise and global motion.

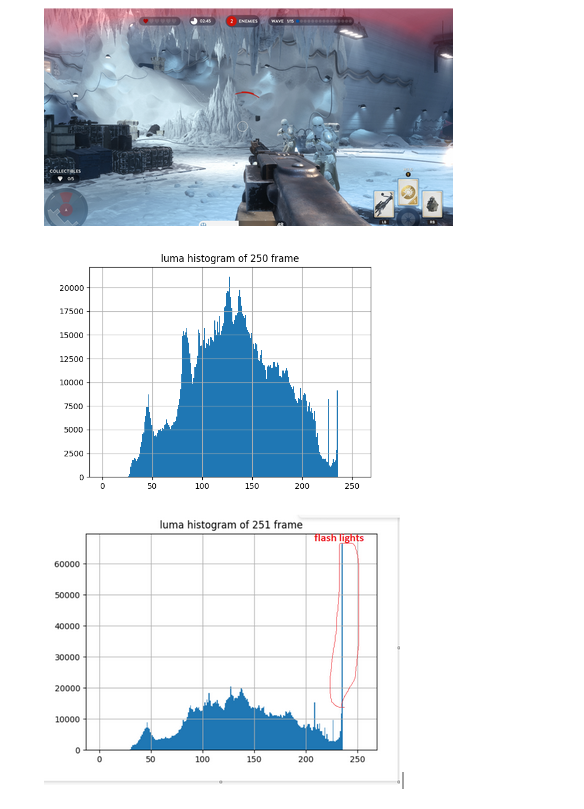

I suggest exploiting pixel-histogram statistics to detect video shots (scene cuts) and gradual transitions.

Method

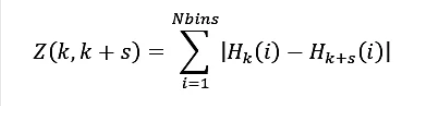

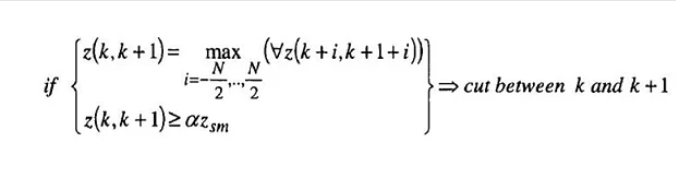

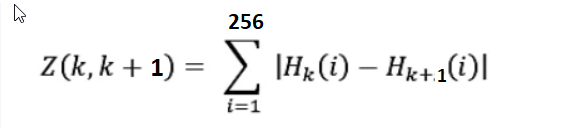

Let’s specify the discontinuity factor Z(k,k+s) between the k-th and (k+s)-th frames:
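Judging by the computation in Appendix A, the factor is presumably the bin-wise sum of absolute histogram differences. The symbol B (the number of histogram bins) is introduced here only for illustration:

```latex
Z(k, k+s) = \sum_{i=1}^{B} \left| H_k(i) - H_{k+s}(i) \right|
```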

Note: It is common to normalize Z(k,k+s) by dividing it by the number of pixels in the frame, which makes the score Z resolution-invariant.

Here Hk(i) is the i-th bin of the grey-value histogram of frame k.

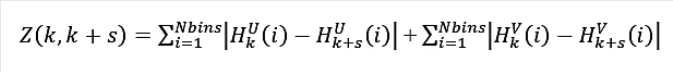

Instead of the grey-value histogram, one can use another popular metric: the color histogram over the U and V pixel values:

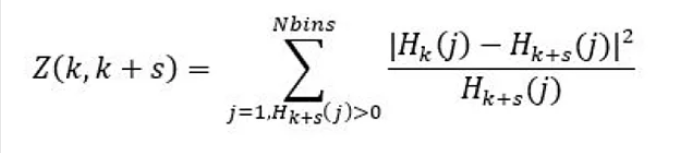
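One plausible reading of the color-histogram metric, sketched under stated assumptions: the absolute bin differences of the U and V histograms are summed and then divided by the total number of chroma samples. The function name, the 8-bit sample range and the normalization are assumptions made for this sketch, not details taken from the figure.

```python
import numpy as np

def chroma_discontinuity(u_a, v_a, u_b, v_b, bins=256):
    """Sum of absolute U and V histogram differences per chroma sample.

    Assumes 8-bit chroma planes of equal size (hypothetical helper).
    """
    z = 0.0
    for plane_a, plane_b in ((u_a, u_b), (v_a, v_b)):
        h_a, _ = np.histogram(plane_a, bins=bins, range=(0, 256))
        h_b, _ = np.histogram(plane_b, bins=bins, range=(0, 256))
        z += float(np.abs(h_a - h_b).sum())
    # Normalize by the number of U plus V samples in one frame.
    return z / (2 * u_a.size)
```

With this normalization the score ranges from 0 (identical chroma histograms) to 2 (no overlap at all).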

The chi-square histogram metric is also popular:

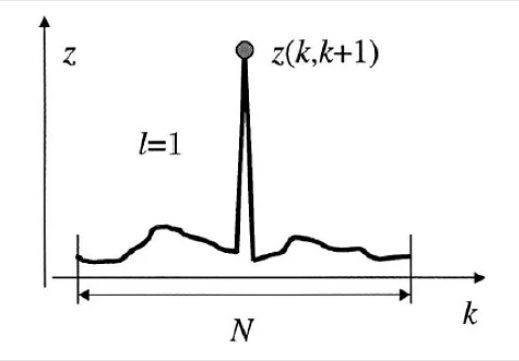
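Several variants of the chi-square histogram distance exist. The sketch below uses one common symmetric form, in which each squared bin difference is divided by the sum of the two bins; whether this matches the figure exactly is an assumption, and the function name is illustrative.

```python
import numpy as np

def chi_square_discontinuity(hist_a, hist_b):
    """Symmetric chi-square distance between two histograms.

    One common variant, assumed here: sum((a - b)^2 / (a + b)).
    """
    h_a = np.asarray(hist_a, dtype=np.float64)
    h_b = np.asarray(hist_b, dtype=np.float64)
    total = h_a + h_b
    nonzero = total > 0  # skip bins that are empty in both frames
    diff = h_a[nonzero] - h_b[nonzero]
    return float(np.sum(diff * diff / total[nonzero]))
```

Squaring the differences emphasizes large bin changes relative to small ones, which tends to sharpen the peak at a cut.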

The general shape of Z(k) around a video shot is illustrated below; an isolated sharp peak surrounded by low discontinuity values can be taken as a reliable indication of a scene cut:

Here N is the sliding-window length (in frames), chosen so that no two video shots occur within one window.

For each frame k in the middle of the N-frame window, a video shot is detected between frames k and k+1 if the following condition is met:

Here zsm is the second-largest discontinuity value within the window. The parameter α can be understood as the shape parameter of the pattern illustrated in the figure above.
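The condition itself is not reproduced in the text, so the sketch below assumes a commonly used form: Z(k,k+1) must be the maximum of its window and at least α times zsm. The function name `detect_cuts` and the default values of `window` and `alpha` are illustrative assumptions.

```python
def detect_cuts(z, window=9, alpha=3.0):
    """Return every index k where a cut between frames k and k+1 is flagged.

    z[k] holds Z(k, k+1). Assumed rule: the centre value is the window
    maximum and is at least alpha times the second-largest value (zsm).
    """
    if window < 3 or window % 2 == 0:
        raise ValueError("window must be an odd number >= 3")
    half = window // 2
    cuts = []
    for k in range(half, len(z) - half):
        win = list(z[k - half:k + half + 1])
        centre = win[half]
        # With the centre as maximum, zsm is the largest remaining value.
        z_sm = max(win[:half] + win[half + 1:])
        if centre > 0 and centre >= max(win) and centre >= alpha * z_sm:
            cuts.append(k)
    return cuts
```

A plateau of equal values never passes the test because zsm then equals the centre value, which is the behaviour one would expect for a gradual transition measured between consecutive frames.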

Unlike scene cuts (detecting a scene cut makes the encoder produce a P- or I-frame), the detection of gradual transitions cannot influence the encoding process. However, detected scene changes, whether abrupt or gradual, can supply auxiliary information to an application (e.g. a video index).

As for detecting gradual transitions (e.g. wipes), Z computed between pairs of consecutive frames does not make these transitions distinguishable. However, if Z is taken between the k-th and (k+n)-th frames, where n > 1 is comparable to the transition length, the same method used for scene cuts can be applied.
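A minimal sketch of computing Z at a frame spacing of n, assuming 8-bit greyscale frames of equal size and per-pixel normalization. The function name `spaced_discontinuity` is hypothetical.

```python
import numpy as np

def spaced_discontinuity(frames, n):
    """Return [Z(k, k+n)] for k = 0 .. len(frames)-n-1, per pixel.

    Assumes 8-bit greyscale frames of identical resolution.
    """
    hists = [np.histogram(f, bins=256, range=(0, 256))[0] for f in frames]
    return [float(np.abs(hists[k] - hists[k + n]).sum()) / frames[k].size
            for k in range(len(frames) - n)]
```

For a synthetic wipe that replaces a quarter of the frame per step, n = 1 yields a flat plateau of equal values, while n equal to the transition length yields a clear maximum at the start of the wipe, which a peak detector can then pick up.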

Note:

- Other metrics can be used instead of the proposed histogram metrics. For example, a pixel-difference metric (such as SAD) can be applied: the average absolute intensity difference between co-located pixels of frames k and k+n. The pixel metric is sensitive to motion and is reliable only when coupled with motion compensation. Because motion compensation is more time-consuming than computing a histogram, the histogram metric is preferable. On the other hand, it has been reported that the motion sensitivity of the pixel-difference metric can be avoided by counting the pixels that change considerably from one frame to the next.
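The two pixel-based variants mentioned in the note can be sketched as follows. The function names and the default `threshold` for a "considerable" change are assumptions made for illustration; the note does not give a value.

```python
import numpy as np

def mean_abs_difference(frame_a, frame_b):
    """Average absolute intensity difference between co-located pixels."""
    # Widen to a signed type so that uint8 subtraction cannot wrap around.
    diff = np.abs(frame_a.astype(np.int16) - frame_b.astype(np.int16))
    return float(diff.mean())

def changed_pixel_ratio(frame_a, frame_b, threshold=30):
    """Fraction of pixels whose intensity changes by more than threshold.

    The threshold value is an assumption, not taken from the text.
    """
    diff = np.abs(frame_a.astype(np.int16) - frame_b.astype(np.int16))
    return float(np.count_nonzero(diff > threshold)) / diff.size
```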

Appendix A

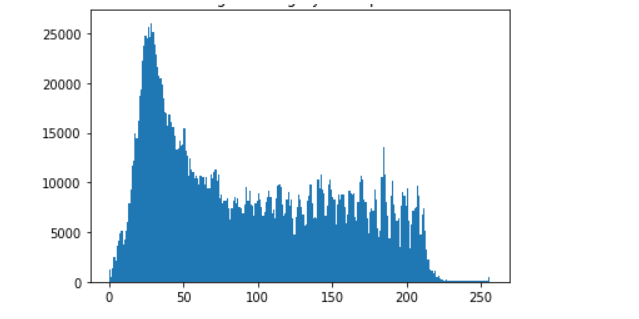

Compute the discontinuity factor Z(k,k+1) between successive images, normalized to the frame resolution NxM:

Z(k,k+1) = Z(k,k+1) / (N*M)

Test images: Battlefield and Unravel.

    import cv2
    import numpy as np
    from matplotlib import pyplot as plt

    # Load both test images as 8-bit greyscale.
    gray_img1 = cv2.imread(r'C:\Tools\battlefield.jpg', cv2.IMREAD_GRAYSCALE)
    gray_img2 = cv2.imread(r'C:\Tools\unravel.jpg', cv2.IMREAD_GRAYSCALE)

    # Histogram of battlefield
    hist, bins = np.histogram(gray_img1, 256, [0, 256])
    plt.hist(gray_img1.ravel(), 256, [0, 256])
    plt.show()

    # Histogram of unravel
    hist2, bins = np.histogram(gray_img2, 256, [0, 256])
    plt.hist(gray_img2.ravel(), 256, [0, 256])
    plt.show()

    # Sum of absolute bin differences, normalized to resolution
    zkk1 = np.sum(np.abs(hist - hist2)) / (gray_img1.shape[0] * gray_img1.shape[1])
    print(zkk1)

Output:

    0.7283159722222222

Appendix B

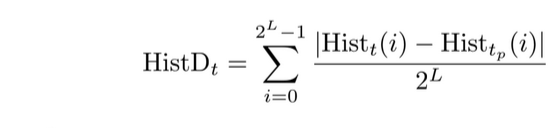

In the paper “Rate-distortion Optimization Using Adaptive Lagrange Multipliers” by Fan Zhang et al., the following method for detecting scene cuts is proposed:

- sum the absolute differences between the histograms of the current and the previous frame

- divide the sum by 2^L, where L=8

If HistDt > 0.002, a scene cut is detected.
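The two steps and the threshold can be sketched as below. One assumption is made loudly here: the histograms are taken as relative frequencies (each summing to 1), because with raw pixel counts a threshold of 0.002 would be exceeded by almost any pair of frames. The summary above does not state this, and the function names are illustrative.

```python
import numpy as np

L_BITS = 8          # L from the description above
THRESHOLD = 0.002   # threshold from the description above

def hist_dt(prev_frame, cur_frame):
    """Sum of absolute histogram differences divided by 2^L.

    ASSUMPTION: histograms are normalized to relative frequencies.
    """
    bins = 2 ** L_BITS
    h_prev = np.histogram(prev_frame, bins=bins, range=(0, bins))[0] / prev_frame.size
    h_cur = np.histogram(cur_frame, bins=bins, range=(0, bins))[0] / cur_frame.size
    return float(np.abs(h_cur - h_prev).sum() / bins)

def is_scene_cut(prev_frame, cur_frame):
    """Apply the HistDt > 0.002 rule to a pair of 8-bit greyscale frames."""
    return hist_dt(prev_frame, cur_frame) > THRESHOLD
```

Under this assumption HistDt lies between 0 and 2/256, so the threshold corresponds to roughly a quarter of the maximum possible histogram change.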
