
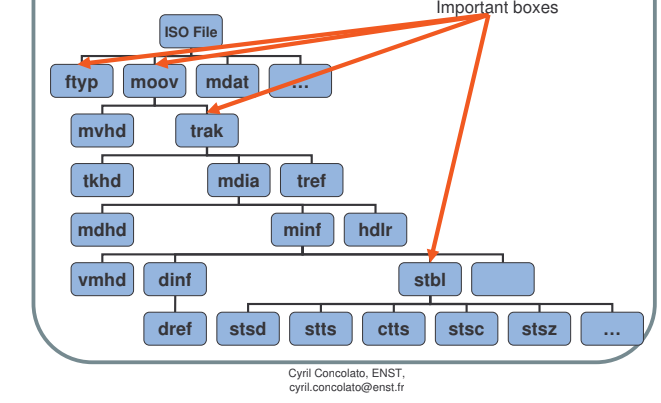

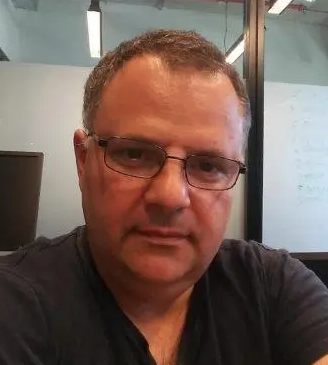

The MP4 container is mainly specified by ISO/IEC 14496-12, although some boxes are elaborated in ISO/IEC 14496-15 and ISO/IEC 14496-14.

The MP4 container is tailored to contain video and audio elementary streams plus the context information (commonly called metadata) necessary for correct playback and editing. The ISO/IEC 14496-12 media file format (ISOBMFF) is a file format for time-based multimedia data (video and/or audio and/or still images).

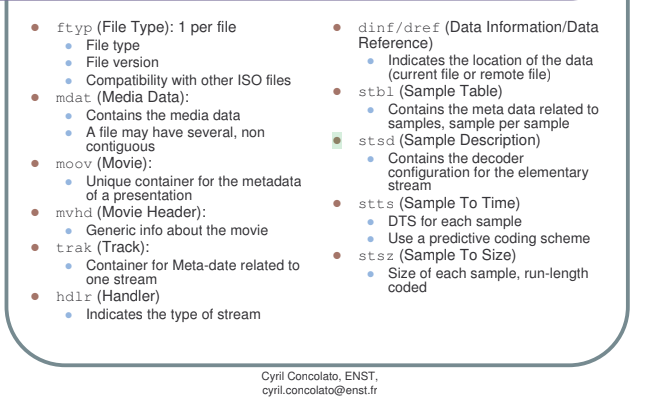

In an ISOBMFF file all data is contained in boxes, and no data is allowed outside the boxes. Each media stream is contained in its own track, where a track is a sequence of timed samples.
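The box structure can be illustrated with a minimal sketch that walks the top-level boxes of a file. It assumes the usual box header (a 32-bit big-endian size followed by a four-character type, with size 1 meaning a 64-bit size follows and size 0 meaning the box runs to the end of the file) and a well-formed input. The names `iter_boxes` and `make_box` are hypothetical helpers, and the byte string is a synthetic stand-in for a real file.

```python
import io
import struct


def iter_boxes(stream, end):
    """Yield (type, offset, size) for every box between the current position and `end`."""
    while stream.tell() + 8 <= end:
        offset = stream.tell()
        size, box_type = struct.unpack(">I4s", stream.read(8))
        if size == 1:
            # A 64-bit 'largesize' follows the type field.
            size = struct.unpack(">Q", stream.read(8))[0]
        elif size == 0:
            # The box extends to the end of the enclosing container.
            size = end - offset
        if size < 8:
            break  # malformed header, stop instead of looping forever
        yield box_type.decode("latin-1"), offset, size
        stream.seek(offset + size)


def make_box(box_type, payload=b""):
    """Build a synthetic box (illustration only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic three-box "file": ftyp, moov, mdat.
data = (make_box(b"ftyp", b"isom" + bytes(4))
        + make_box(b"moov", bytes(16))
        + make_box(b"mdat", bytes(32)))
boxes = list(iter_boxes(io.BytesIO(data), len(data)))
for name, offset, size in boxes:
    print(f"{name} offset={offset} size={size}")
```

For a real file, open it in binary mode and pass the file size as `end`; container boxes such as ‘moov’ can be walked the same way by seeking to the start of their payload.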

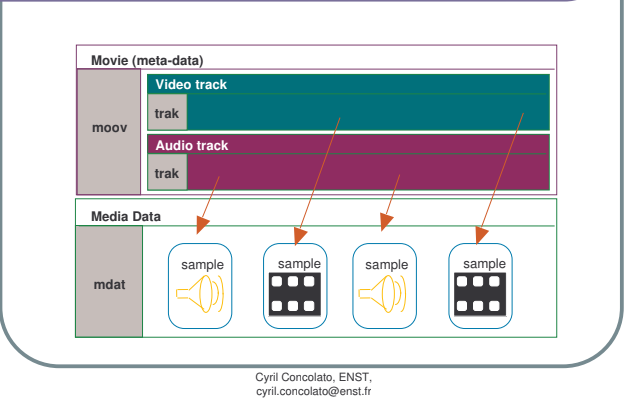

Roughly speaking, an MP4 file is divided into two main sections: metadata (‘moov’) and media data (‘mdat’). The metadata describes the media data: general info, timing information for each video/audio frame, offsets to each audio/video frame and so forth.

The mdat box contains video and audio frames, usually in interleaved order (although so-called ‘flat’ ordering is also used). Notice that video frames are ‘unframed’, i.e. not prefixed by start codes. However, we can easily access any video/audio frame by an offset derived from the corresponding tables in the metadata.

Pros of MP4 Container

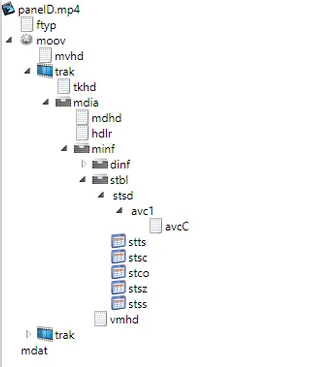

1. Easy to access a selected frame. For example, to access video frame #N in a ts-stream you need to traverse the stream until the N-th frame is encountered. In the MP4 container you derive the offset from the ‘stco’, ‘stsc’ and ‘stsz’ tables in the metadata.

2. Easy to know how many frames are kept in the file: it is the number of entries in the stsz-table in the metadata.

3. Easy to access random access frames: use the stss-table. The stss-box refers to sync samples (random-access frames; in AVC or HEVC usually IDR frames serve as ‘sync’ points) for fast forward/backward and other trick modes. The stss-box is not mandatory. According to the ISO base media file format standard, if the sync sample box is not present, every sample is a random access point. However, many commercial mp4-files lack the stss-box even though not all of their frames are random access points.

4. Easy to perform ‘stream thinning’ and frame-rate reduction, i.e. to remove frames that are not used for reference. Frame dependency info is located in the sdtp-box (optional). The sdtp-box contains a table of dependency flags (8 bits per entry); the size of the table is taken from the corresponding stsz-table. It’s worth mentioning that the syntax of the sdtp-box differs between the MP4 format and QuickTime (both layouts are listed after this list).

5. Ability to download and play the file at the same time, provided the ‘moov’ box is at the beginning (prior to ‘mdat’).

QuickTime format of an sdtp entry (for each video sample):

bit[7] – reserved to 1

bit[6] – if set to 1 then POC of the current frame might be greater than the POC of the next frame (the frame reordering takes place).

bit[5] – if I-picture set 1, otherwise 0

bit[4] – if not I-picture set 1, otherwise 0

bit[3] – if ref_idc of slice NALU is zero then set bit[3]=1, otherwise 0

bit[2] – if ref_idc of slice NALU is non-zero then set bit[2]=1, otherwise 0

bit[1] – 0 if the picture is redundant, otherwise 1 (redundant pictures are highly unlikely in mp4-files, so this bit is rarely 0)

MP4 format of an sdtp entry (for each video sample):

bit[1:0] – set to 10b, which implies that no redundant pictures are present

bit[3:2] – set 10b if ref_idc of the current frame is 0, otherwise set 01b

bit[5:4] – set 10b if current frame is I-picture, otherwise set 01b
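The MP4 layout above can be unpacked with a few shifts and masks. The following is a small sketch rather than a reference implementation. The dictionary keys use the field names from ISO/IEC 14496-12 (they do not appear in the text above), and `is_disposable` is a hypothetical helper that flags frames which could be dropped during stream thinning.

```python
def decode_sdtp_entry(entry):
    """Split one 8-bit sdtp entry (MP4 layout) into its 2-bit fields."""
    return {
        "is_leading": (entry >> 6) & 0x3,             # bit[7:6]
        "sample_depends_on": (entry >> 4) & 0x3,      # bit[5:4]: 10b = I-picture
        "sample_is_depended_on": (entry >> 2) & 0x3,  # bit[3:2]: 10b = ref_idc is 0
        "sample_has_redundancy": entry & 0x3,         # bit[1:0]: 10b = no redundancy
    }


def is_disposable(entry):
    """True when no other frame references this one (bit[3:2] == 10b)."""
    return ((entry >> 2) & 0x3) == 2


# 0x26 = 00 10 01 10b: I-picture, used for reference, not redundant.
# 0x1A = 00 01 10 10b: non-I picture, not used for reference, not redundant.
for value in (0x26, 0x1A):
    print(hex(value), decode_sdtp_entry(value), "disposable:", is_disposable(value))
```

A thinning pass would keep only the samples for which `is_disposable` returns False and rebuild the size, chunk and timing tables accordingly.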

Cons of MP4 Container

- Cutting GOPs is not easy. It’s difficult to cut a GOP (a closed GOP, i.e. one starting with a key frame) out of an MP4 container. Even if you remove the GOP from mdat, you also need to update the tables in the metadata, because the absolute addresses of the remaining frames change.

Notes:

- In addition to the MPEG MP4 container there is a related format, the QuickTime container. Notice that the QuickTime container is neither a superset nor a subset of the MPEG MP4 one (e.g. the interpretation of entries in the sdtp-table in MP4 differs from that in QuickTime). Still, the two containers are similar.

- I recommend MP4 Explorer as a good free mp4-file analyzer.

- There is a special box in the MP4 metadata, the ‘edit list’. With the edit list you can instruct a player to start playback not from the first frame but from a point in the middle.

MP4 structure:


Notes:

- mdat is not box-structured

- sample timing is determined in the following boxes:

“mdhd” – specifies the timescale of the current track.

“stts” – provides decoding timestamps (DTS) for each sample; values are signaled as sample deltas in timescale units, run-length coded (consecutive equal deltas share one entry).

“ctts” – provides presentation timestamps for each sample when needed (e.g. in case of B-frames); each “ctts” value is an offset from the corresponding decoding timestamp derived from “stts”.
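To make the interplay of the three boxes concrete, here is a minimal sketch that expands the run-length tables into per-sample decoding and presentation times. It assumes that ‘stts’ and ‘ctts’ have already been parsed into lists of (sample_count, value) pairs and that edit lists are ignored. The function names and the sample tables are made up for illustration.

```python
def expand_runs(entries):
    """Expand run-length coded (count, value) pairs into one value per sample."""
    values = []
    for count, value in entries:
        values.extend([value] * count)
    return values


def sample_times(stts, ctts, timescale):
    """Return a list of (dts, pts) pairs in seconds, in decoding order."""
    deltas = expand_runs(stts)
    # Without a ctts table, presentation time equals decoding time.
    offsets = expand_runs(ctts) if ctts else [0] * len(deltas)
    times = []
    dts = 0
    for delta, offset in zip(deltas, offsets):
        times.append((dts / timescale, (dts + offset) / timescale))
        dts += delta
    return times


# Hypothetical 25 fps track with timescale 1000, decoding order I P B B.
stts = [(4, 40)]                      # four samples, 40 ticks apart
ctts = [(1, 40), (1, 120), (2, 0)]    # per-sample PTS-DTS offsets
times = sample_times(stts, ctts, 1000)
for index, (dts, pts) in enumerate(times):
    print(f"{index} dts = {dts:.4f} s, pts = {pts:.4f} s")
```

Note how the decoding times ascend monotonically while the presentation times do not, which is exactly the reordering introduced by B-frames.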

How to get the N-th video frame (AVC/H.264 or HEVC/H.265)?

In case of AVC/H.264 or HEVC/H.265 each NAL unit is prefixed by a NALUnitLength field (typically 4 or 2 bytes); the size of this length field is in turn specified in the stsd-box.

An example of video frame:

So, in order to get to the slice NAL unit you need to skip over the AUD (access unit delimiter) and then over the SEI.
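Skipping over the AUD and SEI amounts to walking the length-prefixed NAL units of one sample. The sketch below assumes an AVC/H.264 sample already read from ‘mdat’ and a known length-field size; the helper names and the tiny synthetic sample are hypothetical. For AVC the NAL unit type is the low 5 bits of the first NAL byte (9 = AUD, 6 = SEI, 5 = IDR slice, 1 = non-IDR slice).

```python
def iter_nal_units(sample, length_size=4):
    """Yield each NAL unit of a length-prefixed (non start-code) sample."""
    pos = 0
    while pos + length_size <= len(sample):
        nal_len = int.from_bytes(sample[pos:pos + length_size], "big")
        pos += length_size
        nal = sample[pos:pos + nal_len]
        if nal_len == 0 or len(nal) < nal_len:
            break  # truncated or malformed sample
        yield nal
        pos += nal_len


def avc_nal_type(nal):
    """nal_unit_type: low 5 bits of the first byte (AVC only)."""
    return nal[0] & 0x1F


def avc_ref_idc(nal):
    """nal_ref_idc: bits 5-6 of the first byte (AVC only)."""
    return (nal[0] >> 5) & 0x3


def with_length(nal, length_size=4):
    """Prefix a NAL unit with its length (used to build the demo sample)."""
    return len(nal).to_bytes(length_size, "big") + nal


# Synthetic sample: AUD, SEI, IDR slice (payload bytes are placeholders).
sample = (with_length(b"\x09\xf0")
          + with_length(b"\x06\x05\x01\x00\x80")
          + with_length(b"\x65\x88\x84"))
types = [avc_nal_type(nal) for nal in iter_nal_units(sample)]
first_slice = next(nal for nal in iter_nal_units(sample) if avc_nal_type(nal) in (1, 5))
print(types, "ref_idc of first slice:", avc_ref_idc(first_slice))
```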

Video is stored in the mdat box in runs of successive video frames. Such a run of consecutive video frames is called a chunk. The stco-box in the metadata is a table that stores the address of each chunk.

If we wish to get the address of the first video frame then we have to extract the address of the first chunk.

If we wish to get the address of the second video frame then do the following:

- Check whether the first chunk contains more than one frame. There is a mandatory table, stsc, in the metadata which specifies the number of video frames in each chunk.

- If the first chunk contains only one frame then the address of the second chunk is actually the start of the second frame.

- Otherwise, take the size of the first frame from the stsz-box and skip over the first frame to get the start of the second frame.

We outline the algorithm for finding the address of the N-th frame (frames are counted from zero):

    Determine video-track number
    Read N first entries of stsz-table into SizesList
    Parse stco-box to derive chunk addresses and keep the addresses in ChunkAddressList
    Parse stsc-box to derive chunk lengths in frames, keep the chunk lengths in FramesinChunkList

    # Find the chunk where the N-th frame is located
    chunkNo = 0, totalFrames = 0
    While totalFrames <= N
        totalFrames = totalFrames + FramesinChunkList[chunkNo]
        chunkNo++
    EndWhile
    chunk = chunkNo - 1  # 'chunk' is the number of the chunk where the N-th frame is located

    # Find the number of the first frame in 'chunk'
    NumFramesInChunk = FramesinChunkList[chunk]
    FirstFrameInChunk = totalFrames - NumFramesInChunk

    # Skip over the frames that precede the N-th frame inside the chunk
    StartAddr = ChunkAddressList[chunk]
    For k = 0 to NumFramesInChunk - 1
        If FirstFrameInChunk + k == N
            Return StartAddr
        StartAddr = StartAddr + SizesList[FirstFrameInChunk + k]
    EndFor
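The same lookup can be sketched in Python. This is an illustration under stated assumptions, not the author's script: the tables are assumed to be already parsed, ‘stsc’ is given as run-length (first_chunk, samples_per_chunk) pairs with 1-based chunk numbers (the sample description index is omitted), and the function names and table values are hypothetical.

```python
def expand_stsc(stsc_entries, chunk_count):
    """Turn run-length stsc entries into a per-chunk list of frame counts."""
    per_chunk = []
    for i, (first_chunk, samples_per_chunk) in enumerate(stsc_entries):
        # An entry applies until the next entry's first_chunk (or the last chunk).
        if i + 1 < len(stsc_entries):
            next_first = stsc_entries[i + 1][0]
        else:
            next_first = chunk_count + 1
        per_chunk.extend([samples_per_chunk] * (next_first - first_chunk))
    return per_chunk


def sample_offset(n, chunk_offsets, stsc_entries, sample_sizes):
    """Absolute file offset of the n-th sample (n counted from zero)."""
    frames_in_chunk = expand_stsc(stsc_entries, len(chunk_offsets))
    first_in_chunk = 0
    for chunk_no, count in enumerate(frames_in_chunk):
        if n < first_in_chunk + count:
            # Skip the frames that precede sample n inside this chunk.
            return chunk_offsets[chunk_no] + sum(sample_sizes[first_in_chunk:n])
        first_in_chunk += count
    raise IndexError("sample index out of range")


# Hypothetical tables: two chunks holding 3 and 2 frames.
chunk_offsets = [1000, 5000]               # from stco
stsc_entries = [(1, 3), (2, 2)]            # from stsc
sample_sizes = [100, 200, 300, 400, 500]   # from stsz
offsets = [sample_offset(n, chunk_offsets, stsc_entries, sample_sizes) for n in range(5)]
print(offsets)
```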

The metadata (‘moov’) is not required to be located prior to the media data (‘mdat’); it can also be placed after the media data. In that case progressive (or faststart) playback is not feasible: a player has to download all the media data, then get the metadata, and only then start playback.

Fortunately, ffmpeg has an option ‘-movflags faststart’ that re-arranges the boxes in an mp4-file so that the metadata is located prior to the media data (‘mdat’):

Example

ffmpeg -i slow_start.mp4 -c:a copy -c:v copy -movflags faststart fast_start.mp4

Note on Bitrate Box (‘btrt’)

This box contains auxiliary information: the maximal and average rate in bits/second. How should the bitrate be interpreted? There are many ways to compute the bitrate and each may give a different result. Two parameters specify the bitrate measurement, the window length (in seconds or in frames) and the step size (in seconds or in frames), for example:

window-length = 1s, step-size = ‘frame_duration’ or 1/fps (overlapping windows)

window-length = 1s, step-size = 1s (because the step size is equal to the window length, the windows do not overlap). Note that if the frame rate is 29.97 then a step of exactly 1s is not achievable.

The ISO base media file format (Part 12, edition 2015) specifies the parameter maxBitrate in btrt-box as follows:

maxBitrate gives the maximum rate in bits/second over any window of one second.

So one deduces from this statement that the window size is 1s. But what is the step size: the frame duration, 10ms, or some other value? In practice it is hard to measure the bitrate with a step size below the frame duration.
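The effect of the step size can be seen in a short sketch. It measures the maximum number of bits in any one-second window, with the window start advancing by a configurable number of frames. The function name, the 4 fps frame rate and the frame sizes are invented for illustration; the standard does not prescribe this procedure.

```python
def max_bitrate(sample_sizes, sample_times, window=1.0, step=1):
    """Maximum bits/second over windows of `window` seconds.

    Windows start at every `step`-th sample time; sizes are in bytes,
    times in seconds (decoding order).
    """
    best = 0
    for start in range(0, len(sample_sizes), step):
        t0 = sample_times[start]
        bits = sum(8 * size
                   for size, t in zip(sample_sizes, sample_times)
                   if t0 <= t < t0 + window)
        best = max(best, bits)
    return best / window


# Hypothetical 4 fps stream with two large frames around the 1 s boundary.
sizes = [100, 100, 100, 2000, 2000, 100, 100, 100]
times = [0.0, 0.25, 0.5, 0.75, 1.0, 1.25, 1.5, 1.75]

overlapping = max_bitrate(sizes, times, window=1.0, step=1)      # step = frame duration
non_overlapping = max_bitrate(sizes, times, window=1.0, step=4)  # step = 1 s
print(overlapping, non_overlapping)
```

With non-overlapping windows the two large frames fall into different windows, so the reported maximum is lower than with a per-frame step, which is why the step size matters when interpreting maxBitrate.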

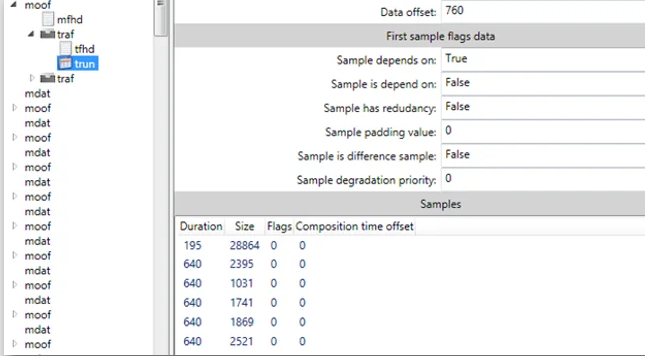

Fragmented MP4

A more detailed explanation of the fragmented mp4 structure is given in a separate pdf-file: Read More on Fragmented MP4

moov [moof mdat+]+ mfra

ffmpeg supports encapsulation of H264/AVC elementary stream into fragmented mp4 (fmp4):

ffmpeg -i <h264-file> -c:v copy -f mp4 -movflags frag_keyframe+empty_moov -y output.mp4

In the above command ffmpeg splits the input H264/AVC elementary stream into segments at key-frames.

However, ffmpeg has a bug in encapsulation into fmp4: no composition time offsets are signaled. If B-frames are used in the input stream then the sample composition time offset should be signaled in the trun-box of each segment (moof).

For information, for every sample in the segment the trun-box specifies the following fields:

- Sample size in bytes

- Sample duration in units of the track timescale (the timescale field of the mdhd box)

- Sample composition time offset, actually equal to pts-dts.

- Sample flags

Each field is signaled optionally; if a field is not present then the default values (specified in another box, tfhd) are taken.

ffmpeg does not put sample composition time offsets, and reordering jitter is observed on some players (e.g. QuickTime player).
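Whether a given file carries composition time offsets can be checked by parsing the trun-box. The sketch below follows the trun layout of ISO/IEC 14496-12 as I understand it: the flag bits 0x000001 (data offset), 0x000004 (first sample flags), 0x000100 (duration), 0x000200 (size), 0x000400 (flags) and 0x000800 (composition time offset) select which fields are present. The function name and the synthetic payload are hypothetical.

```python
import struct


def parse_trun(payload):
    """Parse a trun payload (the bytes after the 8-byte box header)."""
    version = payload[0]
    flags = int.from_bytes(payload[1:4], "big")
    sample_count = struct.unpack_from(">I", payload, 4)[0]
    pos = 8
    result = {"samples": []}
    if flags & 0x000001:
        result["data_offset"] = struct.unpack_from(">i", payload, pos)[0]
        pos += 4
    if flags & 0x000004:
        result["first_sample_flags"] = struct.unpack_from(">I", payload, pos)[0]
        pos += 4
    # Per-sample fields, each present only when its flag bit is set.
    fields = [
        (0x000100, "duration", ">I"),
        (0x000200, "size", ">I"),
        (0x000400, "flags", ">I"),
        # The offset is signed in version 1, unsigned in version 0.
        (0x000800, "composition_time_offset", ">i" if version else ">I"),
    ]
    for _ in range(sample_count):
        sample = {}
        for mask, name, fmt in fields:
            if flags & mask:
                sample[name] = struct.unpack_from(fmt, payload, pos)[0]
                pos += 4
        result["samples"].append(sample)
    return result


# Synthetic trun: version 0, data offset + size + composition offset, 2 samples.
payload = (bytes([0]) + (0x000A01).to_bytes(3, "big")
           + struct.pack(">Ii", 2, 100)
           + struct.pack(">II", 5000, 2000)
           + struct.pack(">II", 800, 0))
trun = parse_trun(payload)
print(trun)
```

If no sample dictionary contains `composition_time_offset` although the stream uses B-frames, the file shows the behaviour described above.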

Details on Fragmented MP4

General structure of fragmented mp4-file (optional boxes are suffixed by *):

ftyp

moov { related to all segments }

mvhd

trak { may be multiple number of traks }

tkhd

mdia

mdhd

hdlr

minf

dinf

stbl

stsd

stsz { may be empty}

…

stsc { may be empty}

mvex*

udta*

moof { meta data of current fragment }

mfhd

traf { may be multiple number of trafs }

mdat

moof

….

mfra*

tfra*

mfro

How to determine whether an input mp4-file is regular or fragmented? The easiest way is to look for moof-boxes which are mandatory and specific in the fragmented mp4-file structure.
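This check can be sketched by collecting the types of the top-level boxes and looking for ‘moof’. The code assumes a well-formed file with the usual box header (32-bit size, four-character type, size 1 for a 64-bit size, size 0 for "until end of file"); the function names and the synthetic byte strings are hypothetical.

```python
import io
import struct


def top_level_types(stream):
    """Return the four-character types of all top-level boxes in the stream."""
    stream.seek(0, io.SEEK_END)
    end = stream.tell()
    pos = 0
    types = []
    while pos + 8 <= end:
        stream.seek(pos)
        size, box_type = struct.unpack(">I4s", stream.read(8))
        if size == 1:
            size = struct.unpack(">Q", stream.read(8))[0]  # 64-bit size
        elif size == 0:
            size = end - pos                                # box runs to end of file
        if size < 8:
            break  # malformed header
        types.append(box_type)
        pos += size
    return types


def is_fragmented(stream):
    """A file is treated as fragmented when it has at least one top-level moof."""
    return b"moof" in top_level_types(stream)


def make_box(box_type, payload=b""):
    """Build a synthetic box (illustration only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


regular = make_box(b"ftyp", bytes(8)) + make_box(b"moov", bytes(16)) + make_box(b"mdat", bytes(32))
fragmented = (make_box(b"ftyp", bytes(8)) + make_box(b"moov", bytes(16))
              + make_box(b"moof", bytes(24)) + make_box(b"mdat", bytes(32)))
print(is_fragmented(io.BytesIO(regular)), is_fragmented(io.BytesIO(fragmented)))
```

For a real file, pass a file object opened with `open(path, "rb")`.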

A fragmented mp4-file can be 100% fragmented, i.e. all media data is dispersed among moofs (in ffmpeg there is a switch ‘empty_moov’ to enforce 100% fragmentation), or partly fragmented, when some media data is located within the mdat-box associated with the moov-box (for example, the first fragment can be coupled with the moov-box).

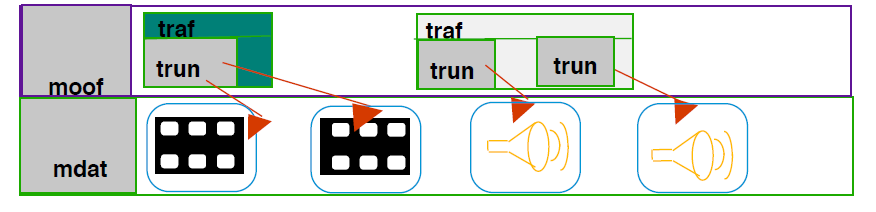

Movie Fragment:

Fragments are always signaled in pairs, ‘moof-mdat’. Usually each GOP is stored in a separate moof-mdat pair (this is called fragmentation at key frames if each GOP starts with a key frame). The ‘moof’ box in turn contains the ‘mfhd’ and ‘traf’ boxes.

Fragments can be in two modes:

- Single Track: separate moof-mdat pairs for each track; in this case one traf box is signaled per moof. For example, the k-th fragment (or k-th moof/mdat pair) contains only audio while the following fragment carries video.

- Multiple Track: fragments (moof/mdat pairs) contain several tracks (as a result several traf boxes are signaled).

mfhd-box:

mfhd contains sequence_number for integrity check. If there is a gap in sequence_numbers of successive moofs then apparently a fragment got lost.

tfhd-box:

tfhd-box carries the following fields

flags

base-data-offset-present

sample-description-index-present

…

base_data_offset – signaled when base-data-offset-present is 1. My suggestion is to set it to the start of each moof and to express all offsets within the current moof relative to the moof start.

trun-box:

ffmpeg produces slightly buggy video sample durations in the first trun-box: the first sample duration is much smaller than expected (1/fps).

Generally speaking, there is no reason to signal video frame durations, since we can specify the default sample duration in the tfhd-box as 1/fps in units of the track timescale (mdhd).

Notice that the frame duration is specified as DTS(n)-DTS(n-1); for frame 0 the duration should be taken from default_frame_duration.

If sample flags are signaled in the original file, we need to update them and signal them in the output file.

mvex box:

a. mehd – optional, specifies the duration of the whole file. The media duration actually corresponds to the longest track duration (including all movie fragments).

The mehd-box contains only one parameter, ‘fragment_duration’, in units specified in the mvhd-box.

if (version==1) {

unsigned int(64) fragment_duration;

} else { // version==0

unsigned int(32) fragment_duration;

}

b. trex – mandatory, a separate trex-box is signaled for each trak.
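The version-dependent mehd layout shown above can be read with a few lines of Python. This is a sketch assuming the payload starts with the usual one-byte version and three flag bytes, and that the movie timescale has been taken from the mvhd-box; `parse_mehd` and the numeric values are hypothetical.

```python
import struct


def parse_mehd(payload, movie_timescale):
    """Return (fragment_duration in ticks, duration in seconds).

    `payload` is the mehd content after the 8-byte box header:
    version (1 byte), flags (3 bytes), then a 32- or 64-bit duration.
    """
    version = payload[0]
    fmt = ">Q" if version == 1 else ">I"
    fragment_duration = struct.unpack_from(fmt, payload, 4)[0]
    return fragment_duration, fragment_duration / movie_timescale


# Version 0: 32-bit duration of 90000 ticks at a timescale of 1000.
v0 = bytes([0, 0, 0, 0]) + struct.pack(">I", 90000)
# Version 1: 64-bit duration that would not fit into 32 bits.
v1 = bytes([1, 0, 0, 0]) + struct.pack(">Q", 2 ** 33)

print(parse_mehd(v0, 1000))
print(parse_mehd(v1, 1000)[0])
```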

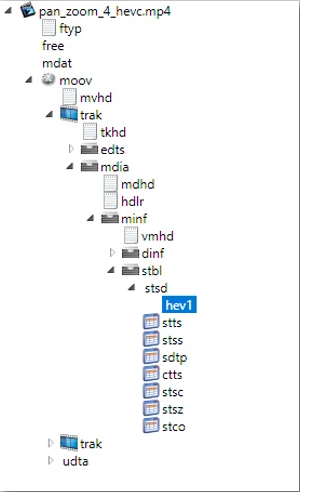

The stsd-box contains specific info related to the elementary stream of a given track (notice that each track contains its own stsd-box). If the track contains an AVC/H.264 stream then ‘avc1/avcC’ must be present (mandatory); here ‘avcC’ is a leaf box (i.e. it does not contain sub-boxes):

If the track contains an HEVC/H.265 stream then either ‘hev1’ or ‘hvc1’ must be present (mandatory):

The boxes ‘avcC’ and ‘hev1’ contain specific information such as frame resolution, video profile and level, and high-level headers (SPS, PPS, etc.). Because the high-level headers are located in the ‘stsd’ box, it’s redundant to insert them in ‘mdat’ (although many mp4-files contain, for example, the SPS in both ‘stsd’ and ‘mdat’; moreover, the SPS is often repeated in ‘mdat’ at each IDR frame).

Python Scripts to Process mp4-files

Parse stsd-box of video track in mp4-file

The Python script ParseMetaHdrsOfVideoInMP4.py (adapted for Python 2.x) parses the stsd-box of the video track in an mp4-file (actually it parses the avcC, hev1 or hvc1 boxes within the stsd-box) and prints the relevant video-stream specific info. In addition, the script dumps the SPS, PPS and VPS (in case of HEVC) headers into separate binary files (adding the start code 00 00 00 01).

Usage:

-i input mp4 file

-d if set then all high-level headers are dumped (by default as vps.bin, sps.bin and pps.bin)

-v specify the file name of dumped vps header (relevant if ‘-d’ set)

-s specify the file name of dumped sps header (relevant if ‘-d’ set)

-p specify the file name of dumped pps header (relevant if ‘-d’ set)

Example [HEVC stream]:

python ParseMetaHdrsOfVideoInMP4.py -i test_hevc.mp4

video trak number 0

Num of descriptors 1

hevc video found

resolution 1920×1080

Configuration Version 0x1

profile_idc 0x1

general_profile_compatibility_flags 60000000

progressive_source_flag 1

interlaced_source_flag 0

non_packed_constraint_flag 0

frame_only_constraint_flag 1

level_idc 0x7b

min_spatial_segmentation_idc 0x0

parallelismType 0

chromaFormat 1

luma_bit_depth 8

chroma_bit_depth 8

avgFrameRate 0

constantFrameRate 0

numTemporalLayers 1

temporalIdNested 1

nal lengthSize 4

high level headers 4 array_completeness 0

header type VPS

nal_count 1

hdr_len 24 array_completeness 0

header type SPS

nal_count 1

hdr_len 41 array_completeness 0

header type PPS

nal_count 1

hdr_len 7 array_completeness 0

header type SEI

nal_count 1

hdr_len 2050

Notice that if the array_completeness parameter of SPS/PPS/VPS is 1 then the corresponding header is not present in ‘mdat’; otherwise it may be present.

Traverse a fragmented MP4 file and print H.264/AVC frame addresses (absolute), frame types and sizes

The Python script H264PictureStatsFromFragMP4.py (adapted for Python 2.x):

Usage:

python H264PictureStatsFromFragMP4.py <mp4-file (fragmented with extension ‘mp4’ or ‘mov’)>

Example:

python H264PictureStatsFromFragMP4.py frag_test1.mp4

idr addr 1383, size 52022

ipb addr deb9, size 43076

ipb addr 186fd, size 45417

ipb addr 23866, size 43996

ipb addr 2e442, size 37139

ipb addr 37555, size 45353

ipb addr 4267e, size 50923

ipb addr 4ed69, size 51348

ipb addr 5b5fd, size 52766

ipb addr 6841b, size 48775

ipb addr 742a2, size 50086

ipb addr 80648, size 48631

ipb addr 8c43f, size 47915

ipb addr 97f6a, size 47684

ipb addr a39ae, size 46941

ipb addr af10b, size 46394

ipb addr ba645, size 50040

ipb addr c69bd, size 44995

ipb addr d1980, size 50061

ipb addr ddd0d, size 45079

…..

ipb addr 6c60973, size 47789

ipb addr 6c6c420, size 51143

ipb addr 6c78be7, size 46958

ipb addr 6c84355, size 50907

ipb addr 6c90a30, size 46129

ipb addr 6c9be61, size 57305

ipb addr 6ca9e3a, size 51191

ipb addr 6cb6631, size 49333

ipb addr 6cc26e6, size 58087

ipb addr 6cd09cd, size 45869

ipb addr 6cdbcfa, size 50019

number of frames 2373,

number of IDRs 5

moof cnt 5, video trafs 5

Use Case: stts and ctts boxes

Decoding times of each sample in a track of an mp4-file are packed into the ‘stts’ box, which is mandatory, while presentation times are packed into another box, ‘ctts’. For audio the ‘stts’ box is sufficient since decoding and presentation times coincide (assuming that decoding is instantaneous).

However, for video the decoding and presentation times can differ due to reordering (some frames have to wait before being displayed). Due to reordering, presentation times (in decoding order) are not necessarily monotonically ascending, while decoding times must be monotonically ascending.

The script ParseTimingInfoInMp4 parses the decoding (‘stts’) and presentation (‘ctts’) tables of the video track and prints the decoding and presentation time for each sample in seconds, plus the difference between presentation and decoding times in ms (important note: commands in the edit list are not considered).

Usage:

-i input mp4-file

-v verbose mode, print all intermediate info (default false)

Example [decoding and presentation times are in units of seconds]:

python ParseTimingInfoInMp4.py -i test.mp4

0 dts = 0.0000 s, pts = 0.1333 s, diff in ms 133.33

1 dts = 0.0667 s, pts = 0.4000 s, diff in ms 333.33

2 dts = 0.1333 s, pts = 0.2667 s, diff in ms 133.33

3 dts = 0.2000 s, pts = 0.2000 s, diff in ms 0.00

4 dts = 0.2667 s, pts = 0.3333 s, diff in ms 66.67

5 dts = 0.3333 s, pts = 0.6667 s, diff in ms 333.33

6 dts = 0.4000 s, pts = 0.5333 s, diff in ms 133.33

7 dts = 0.4667 s, pts = 0.4667 s, diff in ms 0.00

8 dts = 0.5333 s, pts = 0.6000 s, diff in ms 66.67

9 dts = 0.6000 s, pts = 0.9333 s, diff in ms 333.33

10 dts = 0.6667 s, pts = 0.8000 s, diff in ms 133.33

11 dts = 0.7333 s, pts = 0.7333 s, diff in ms 0.00

12 dts = 0.8000 s, pts = 0.8667 s, diff in ms 66.67

13 dts = 0.8667 s, pts = 1.2000 s, diff in ms 333.33

14 dts = 0.9333 s, pts = 1.0667 s, diff in ms 133.33

15 dts = 1.0000 s, pts = 1.0000 s, diff in ms 0.00

16 dts = 1.0667 s, pts = 1.1333 s, diff in ms 66.67

Get H264 Video Frame Statistics in MP4 File

The Python script GetVideoFramesStatisticsInMP4.py (adapted for Python 3.x) prints video frame types (i, p, b or idr), sizes and absolute addresses (from the beginning of the mp4 file). The mp4 file must be a regular non-fragmented media file containing an h264 stream.

For each frame the following data is printed:

frame counter, frame size (in bytes), frame address from the beginning of the file, frame type ‘ipb’ or idr

At the end the overall statistics are printed:

Total Samples: <total_number_samples>

IDR count: <number_IDRs>

SPS count: <number_SPS> // it’s not uncommon that SPS is not present in the mdat-box, since SPS is already signaled in the metadata.

PPS count: <number_PPS> // it’s not uncommon that PPS is not present in the mdat-box, since PPS is already signaled in the metadata.

SEI count: 0

Example:

python GetVideoFramesStatisticsInMP4.py box.mp4

0, Size: 47183, Addr: 0x47d5, Type: idr

1, Size: 1353, Addr: 0x10024, Type: ipb

2, Size: 249, Addr: 0x1056d, Type: ipb

3, Size: 144, Addr: 0x109a8, Type: ipb

4, Size: 241, Addr: 0x10bd9, Type: ipb

5, Size: 3348, Addr: 0x10e6b, Type: ipb

6, Size: 658, Addr: 0x11ec1, Type: ipb

7, Size: 290, Addr: 0x122f4, Type: ipb

8, Size: 435, Addr: 0x125b7, Type: ipb

9, Size: 6492, Addr: 0x1290b, Type: ipb

10, Size: 2042, Addr: 0x145a9, Type: ipb

11, Size: 908, Addr: 0x14f44, Type: ipb

12, Size: 551, Addr: 0x15471, Type: ipb

13, Size: 11721, Addr: 0x159da, Type: ipb

14, Size: 2385, Addr: 0x18944, Type: ipb

15, Size: 360, Addr: 0x19436, Type: ipb

16, Size: 725, Addr: 0x1973f, Type: ipb

17, Size: 7955, Addr: 0x19d56, Type: ipb

18, Size: 2265, Addr: 0x1be0a, Type: ipb

19, Size: 724, Addr: 0x1c884, Type: ipb

20, Size: 609, Addr: 0x1ce9a, Type: ipb

21, Size: 9987, Addr: 0x1d29c, Type: ipb

22, Size: 1856, Addr: 0x1fb40, Type: ipb

23, Size: 1125, Addr: 0x20421, Type: ipb

24, Size: 766, Addr: 0x20bc8, Type: ipb

25, Size: 7488, Addr: 0x21067, Type: ipb

…

451, Size: 2100, Addr: 0x1ce53c, Type: ipb

452, Size: 740, Addr: 0x1cef11, Type: ipb

453, Size: 470, Addr: 0x1cf537, Type: ipb

454, Size: 1835, Addr: 0x1cf8ae, Type: ipb

455, Size: 852, Addr: 0x1d017a, Type: ipb

Total Samples: 456

IDR count: 3

SPS count: 0

PPS count: 0

SEI count: 0

Note:

ffprobe is not recommended here; it’s buggy.

For example, for the box.mp4 file, ffprobe reports two I-pictures, although the file contains three I-pictures (actually three IDRs):

ffprobe -hide_banner -v panic -select_streams v:0 -show_frames box.mp4 | findstr pict_type=I

pict_type=I

pict_type=I

Moreover, the number of frames reported by ffprobe is 455, although the mp4-file contains 456 frames:

ffprobe -v error -count_frames -select_streams v:0 -show_entries stream=nb_read_frames box.mp4

[h264 @ 0000014abf953780] A non-intra slice in an IDR NAL unit.

[h264 @ 0000014abf953780] decode_slice_header error

[h264 @ 0000014abf9553c0] A non-intra slice in an IDR NAL unit.

[h264 @ 0000014abf9553c0] decode_slice_header error

[STREAM]

nb_read_frames=455

[/STREAM]

Parse Metadata of stsd-box

The stsd-box contains configuration for an elementary stream decoder (e.g. sequence header). The Python script ParseStsdBoxInMP4 parses the stsd-box (currently only for AVC/H.264).

Example:

python ParseStsdBoxInMP4.py box.mp4

video trak number 1

Num of descriptors 1

avc video found, avc1-box content:

resolution 640×480

Configuration Version 0x1

profile_idc 0x64 (100)

profile_compatibility 0x0 (0)

AVC Level 0x1e (30)

nal lengthSize 4

sps len 25

SPS parsing:

4 Start Code Emulation Bytes Exist and Removed

profile_idc 100

constraint_flags 0 0 0 0

level 30

sps_id 0

chroma_format_idc 1

depth_luma 8, depth_chroma 8

qpprime_y_zero_transform_bypass_flag 0

log2_max_frame_num 4

pic_order_cnt_type 0

log2_max_pic_order_cnt_lsb 6

max_num_ref_frames 4

gaps_in_frame_num_value_allowed_flag 0

width 640, height 480

frame_mbs_only_flag 1

direct_8x8_inference_flag 1

frame_cropping_flag 0

vui_parameters_present_flag 1

aspect_ratio_info_present_flag 1

aspect_ratio_idc 1

overscan_info_present_flag 0

video_signal_type_present_flag 0

chroma_loc_info_present_flag 0

timing_info_present_flag 1

num_units_in_tick 1, time_scale 50, fixed_frame_rate_flag 1

nal_hrd_parameters_present_flag 0

vcl_hrd_parameters_present_flag 0

pic_struct_present_flag 0

bitstream_restriction_flag 1

motion_vectors_over_pic_boundaries_flag 1

max_bytes_per_pic_denom 0

max_bits_per_mb_denom 0

max_mv_length_horizontal 1024

max_mv_length_vertical 1024

num_reorder_frames 2

max_dec_frame_buffering 4

pps len 6

PPS parsing:

pic_parameter_set_id 0

seq_parameter_set_id 0

Entropy Mode CABAC

bottom_field_pic_order_in_frame_present_flag 0

num_ref_idx_l0_default_active 3

num_ref_idx_l1_default_active 1

weighted_pred_flag 1, weighted_bipred_idc 2

pic_init_qp 23

chroma_qp_index_offset -2

deblocking_filter_control_present_flag 1

constrained_intra_pred_flag 0

redundant_pic_cnt_present_flag 0

Count Frames in H264 Video Trak of mp4-file

The script CountVideoFramesH264MP4 (adapted for Python 3.x) reports the number of video frames according to the number of entries in the stsz-box in the mp4-file’s metadata.

Usage:

-i input mp4 file

-v if true then some parameters of the metadata are printed (default false)

Example (with verbose):

python CountKeyFramesH264MP4.py -i test.mp4 -v

video track number 0

width 1920

width 1440

NAL Length field size 4 bytes

Total number of frames (declared in meta-data) 4157

Example (without verbose):

python CountKeyFramesH264MP4.py -i test.mp4

Total number of frames (declared in meta-data) 4157

Example (video trak is absent):

python CountKeyFramesH264MP4.py -i test_audio.mp4

Traceback (most recent call last):

…

raise Exception('Video Trak Not Found')

Exception: Video Trak Not Found

Get NALU Statistics in Video Track of mp4-file Containing H264/AVC

The script GetMp4NalLayout (adapted for Python 3.x) reports all NALUs in the video track of an mp4-file containing the h264/avc video format only.

Usage:

-i input mp4 file containing AVC/H264 video

-n number of frames to process, if 0 then the whole stream is processed

-v if set then each NALU data is printed (default false)

Example [general statistics only]:

python GetMp4NalLayout.py -i test.mp4

File test.mp4

Total NAL Statistics:

Total NALs 8314

Frame Count 4157

auds 4157

sps 0

pps 0

sei 0

regular slices 4073

idr slices 84

Fillers 0

EndOfSeq 0

EndOfStream 0

Example [full statistics only]:

For each NALU the following data is printed:

Frame count (counting from zero) , NAL name and idx, NAL offset in hex (in mp4-file), size of the NAL in hex-format and in decimal format in bytes

Examples:

FrameCnt 4145, Nal slice (1 ), refIdc 1, offset 2530dcce, size 21138 (dec 135480)

FrameCnt 4149, Nal aud (9 ), refIdc 0, offset 25394127, size 2 (dec 2)

python GetMp4NalLayout.py -i test.mp4 -v

…

FrameCnt 4144, Nal aud (9 ), refIdc 0, offset 252ecba9, size 2 (dec 2)

FrameCnt 4144, Nal slice (1 ), refIdc 1, offset 252ecbaf, size 20f1c (dec 134940)

FrameCnt 4145, Nal aud (9 ), refIdc 0, offset 2530dcc8, size 2 (dec 2)

FrameCnt 4145, Nal slice (1 ), refIdc 1, offset 2530dcce, size 21138 (dec 135480)

FrameCnt 4146, Nal aud (9 ), refIdc 0, offset 2532f002, size 2 (dec 2)

FrameCnt 4146, Nal slice (1 ), refIdc 1, offset 2532f008, size 220d3 (dec 139475)

FrameCnt 4147, Nal aud (9 ), refIdc 0, offset 253512d8, size 2 (dec 2)

FrameCnt 4147, Nal slice (1 ), refIdc 1, offset 253512de, size 216bc (dec 136892)

FrameCnt 4148, Nal aud (9 ), refIdc 0, offset 25372ba2, size 2 (dec 2)

FrameCnt 4148, Nal slice (1 ), refIdc 1, offset 25372ba8, size 2138e (dec 136078)

FrameCnt 4149, Nal aud (9 ), refIdc 0, offset 25394127, size 2 (dec 2)

FrameCnt 4149, Nal slice (1 ), refIdc 1, offset 2539412d, size 20d66 (dec 134502)

FrameCnt 4150, Nal aud (9 ), refIdc 0, offset 253b5090, size 2 (dec 2)

FrameCnt 4150, Nal idr (5 ), refIdc 1, offset 253b5096, size 6a6df (dec 435935)

FrameCnt 4151, Nal aud (9 ), refIdc 0, offset 2541f972, size 2 (dec 2)

FrameCnt 4151, Nal slice (1 ), refIdc 1, offset 2541f978, size 211c0 (dec 135616)

FrameCnt 4152, Nal aud (9 ), refIdc 0, offset 25440d34, size 2 (dec 2)

FrameCnt 4152, Nal slice (1 ), refIdc 1, offset 25440d3a, size 2127b (dec 135803)

FrameCnt 4153, Nal aud (9 ), refIdc 0, offset 254621b2, size 2 (dec 2)

FrameCnt 4153, Nal slice (1 ), refIdc 1, offset 254621b8, size 217b9 (dec 137145)

FrameCnt 4154, Nal aud (9 ), refIdc 0, offset 25483b6d, size 2 (dec 2)

FrameCnt 4154, Nal slice (1 ), refIdc 1, offset 25483b73, size 21db5 (dec 138677)

FrameCnt 4155, Nal aud (9 ), refIdc 0, offset 254a5b25, size 2 (dec 2)

FrameCnt 4155, Nal slice (1 ), refIdc 1, offset 254a5b2b, size 22e4e (dec 142926)

FrameCnt 4156, Nal aud (9 ), refIdc 0, offset 254c897d, size 2 (dec 2)

FrameCnt 4156, Nal slice (1 ), refIdc 1, offset 254c8983, size 220f0 (dec 139504)

File test.mp4

Total NAL Statistics:

Total NALs 8314

Frame Count 4157

auds 4157

sps 0

pps 0

sei 0

regular slices 4073

idr slices 84

Fillers 0

EndOfSeq 0

EndOfStream 0

Check whether mp4-file Contains HEVC or H264 Video Stream

If an mp4 file contains a video track and the stsd-box contains an avc1-box then the video is H264; if the stsd-box contains hev1 or hvc1 then the video is HEVC.

The script CheckVideoTypeInMP4 checks whether the stsd-box of the video track contains an ‘avc1’ box or hev1/hvc1, and thus determines the video stream type: H264 or HEVC.

Usage:

-i input mp4 file

-v verbose mode (default false)

Example: with verbose mode

python CheckVideoTypeInMP4.py -i test.mp4 -v

video trak number 0

stsd-box: Num of descriptors 1

This file contains H264 video

resolution 640×480

Fragment an ordinary mp4-file into a fragmented one; each fragment is ~1s long, and by default video and audio are placed in separate moof-boxes:

mp4fragment.exe test.mp4 --fragment-duration 1000 test_f.mp4

Note: If only the video track is supposed to be converted, use ‘--track video’ and the output file will contain only video data.

mp4fragment.exe test.mp4 --track video --fragment-duration 1000 test_f.mp4