There are four main approaches to encoding stereo video in AVC/H.264 or HEVC/H.265:

1) [Multi-view Coding (MVC)]

Both AVC/H.264 and HEVC/H.265 support a scalable-style extension for multi-view video (officially called MVC in H.264; the HEVC counterpart is MV-HEVC) that fully exploits inter-view and intra-view redundancies. This method is reported to give the best coding efficiency, but at the price of sharply increased complexity. In addition, MVC is not supported by most hardware players or by a significant portion of hardware encoders.

2) [Simulcast]

This is the most straightforward approach: each view is encoded as a separate, self-contained stream. The approach is simple but sub-optimal, since inter-view redundancy is not exploited at all.

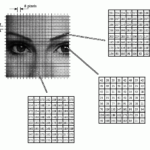

3) [Spatial Frame Packing]

We build a sequence of super-frames from two co-timed views. There are several ways to pack the views: vertically, horizontally, or line-interleaved (even-numbered lines belong to the left view and odd-numbered lines to the right view). With vertical or horizontal packing, inter-view redundancy is poorly exploited, because long MVs are required to reach the co-located area of the other view. If the views are interleaved line by line, inter-view redundancy is exploited much better, but “bad weaves” appear (as in interlaced video), and these artifacts tend to reduce the gain in coding efficiency.
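The three packing options can be sketched in a few lines of Python. This is only an illustration: `pack_frames` and its mode names are hypothetical, a frame is modeled as a plain list of pixel rows, and both views are kept at full resolution (real systems often down-sample each view so that the super-frame keeps the original size).

```python
def pack_frames(left, right, mode):
    """Pack two co-timed views (lists of pixel rows) into one super-frame.

    Hypothetical helper for illustration; no down-sampling is applied.
    """
    same_shape = len(left) == len(right) and all(
        len(a) == len(b) for a, b in zip(left, right)
    )
    if not same_shape:
        raise ValueError("views must have identical dimensions")

    if mode == "side_by_side":
        # Horizontal packing: each output row is left row followed by right row.
        return [list(l_row) + list(r_row) for l_row, r_row in zip(left, right)]
    if mode == "top_bottom":
        # Vertical packing: the left view sits above the right view.
        return [list(row) for row in left] + [list(row) for row in right]
    if mode == "line_interleaved":
        # Even output lines come from the left view, odd lines from the right.
        packed = []
        for l_row, r_row in zip(left, right):
            packed.append(list(l_row))
            packed.append(list(r_row))
        return packed
    raise ValueError(f"unknown packing mode: {mode}")
```

In the side-by-side and top-bottom layouts the two views are half a super-frame apart, which is why inter-view prediction needs long MVs there, while in the line-interleaved layout the co-located rows are adjacent.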

4) [Time Frame Interleaving]

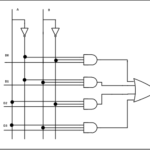

The left-view frame is followed by the right-view frame and vice versa, so the frame rate is doubled. To exploit inter-view redundancy, the number of reference frames must be at least two. In that case the second-most-recent reference serves intra-view (temporal) prediction, while the most recent reference serves inter-view prediction. Here it is worth using weighted bi-prediction to blend the intra-view and inter-view predictors, with the weights signaled explicitly in the slice header.
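To make the roles of the two references concrete, here is a small Python sketch. The function names and the `(view, time, frame)` tuple layout are my own for illustration; the sketch simply treats the most recently coded frames as the references.

```python
def interleave_views(left_frames, right_frames):
    """Return the coding order L0, R0, L1, R1, ... as (view, time, frame) tuples."""
    if len(left_frames) != len(right_frames):
        raise ValueError("both views must have the same number of frames")
    sequence = []
    for t, (l_frame, r_frame) in enumerate(zip(left_frames, right_frames)):
        sequence.append(("L", t, l_frame))
        sequence.append(("R", t, r_frame))
    return sequence


def reference_roles(sequence, index, num_refs=2):
    """Label the num_refs most recent frames before `index` by prediction role."""
    current_view = sequence[index][0]
    roles = []
    first = index - 1
    last = max(index - 1 - num_refs, -1)  # exclusive lower bound
    for ref_idx, pos in enumerate(range(first, last, -1)):
        ref_view, ref_time, _ = sequence[pos]
        role = "intra-view" if ref_view == current_view else "inter-view"
        roles.append((ref_idx, ref_view, ref_time, role))
    return roles
```

For the frame L1 in the order L0, R0, L1, R1, `reference_roles` labels R0 (ref_idx 0) as the inter-view reference and L0 (ref_idx 1) as the intra-view one, which matches the description above.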

So, explicit weighted bi-prediction can provide some gain in coding efficiency.
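As a rough sketch of what such blending looks like, the function below follows the general shape of the H.264 explicit weighted bi-prediction formula (integer weights, a log2 denominator, offsets, and clipping). The function and parameter names are mine, and the code works on flat lists of samples; consult the standard for the normative details.

```python
def weighted_bipred(pred_intra_view, pred_inter_view, w0, w1,
                    log_wd=5, o0=0, o1=0, bit_depth=8):
    """Blend two predictors sample by sample with explicit integer weights.

    Illustrative sketch only: w0 weights the intra-view (temporal) predictor,
    w1 the inter-view predictor. With log_wd=5, w0=w1=32 gives a plain average.
    """
    max_val = (1 << bit_depth) - 1
    rounding = 1 << log_wd
    blended = []
    for p0, p1 in zip(pred_intra_view, pred_inter_view):
        value = ((p0 * w0 + p1 * w1 + rounding) >> (log_wd + 1)) \
                + ((o0 + o1 + 1) >> 1)
        blended.append(min(max(value, 0), max_val))  # clip to the sample range
    return blended
```

An encoder could, for example, shift weight toward the intra-view predictor in static scenes and toward the inter-view predictor under fast motion; how to choose the weights is outside the scope of this sketch.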

Let’s imagine a near-static scene (the current frame is almost a replica of the previous one). If the immediately preceding reference (ref_idx=0) belongs to the same view (as in the spatial frame packing mode), then obviously most MBs are coded as skipped MBs. However, if the previous frame belongs to the opposite view, most blocks are probably displaced due to parallax (all blocks are displaced; those close to the camera are displaced more than those far away, although the displacement of very distant objects is too small for the camera to “grab”). For near-static scenes, spatial frame packing is therefore beneficial and time-interleaved packing is not a good choice, since ref_idx=0 is not chosen and signaling ref_idx>0 takes more bits. On the other hand, with significant motion, parallax displacements are significantly smaller than temporal ones, so time-interleaved frame packing is expected to be more beneficial than spatial packing. Changing the frame packing according to the scene status (“static” or “motion”) would be an awkward solution.
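The dependence of parallax on distance can be illustrated with the textbook relation for an idealized pair of parallel, rectified pinhole cameras, where the disparity is the focal length times the baseline divided by the depth. That camera model is my assumption (the discussion above does not fix one), and the function name and numbers below are purely illustrative.

```python
def disparity_pixels(focal_length_px, baseline_m, depth_m):
    """Horizontal disparity between the two views, in pixels.

    Assumes parallel, rectified pinhole cameras (an idealization).
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_length_px * baseline_m / depth_m


# Illustrative values: 1000 px focal length, 6.5 cm baseline.
near = disparity_pixels(1000, 0.065, 1.0)    # object 1 m away
far = disparity_pixels(1000, 0.065, 500.0)   # object 500 m away
```

With these numbers the near object is shifted by tens of pixels while the distant one is shifted by a fraction of a pixel, so only very distant blocks can still match the opposite view without a motion vector.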

The only solution I can see is to use time-interleaved frame packing and to enable the reference frame reordering mechanism. For static scenes the encoder reorders the reference list so that same-view references come first; otherwise the reference list keeps its natural order, according to POC.
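A minimal sketch of that decision is shown below. The function name, the `(poc, view)` tuples, and the `scene_is_static` flag are all hypothetical; how the encoder detects a static scene is left open, and a real encoder would signal the reordering in the slice header rather than just sort a list.

```python
def build_ref_list(decoded, current_view, scene_is_static, num_refs=2):
    """Build the reference list for the current frame.

    decoded: list of (poc, view) tuples for already-decoded frames.
    Hypothetical sketch: keep the num_refs most recent frames, then
    optionally move same-view references to the front.
    """
    # Natural order: most recent frame (highest POC) first.
    refs = sorted(decoded, key=lambda f: f[0], reverse=True)[:num_refs]
    if scene_is_static:
        # Stable sort: same-view references first, POC order kept otherwise.
        refs = sorted(refs, key=lambda f: f[1] != current_view)
    return refs


decoded = [(0, "L"), (1, "R"), (2, "L"), (3, "R")]
natural = build_ref_list(decoded, "L", scene_is_static=False)
reordered = build_ref_list(decoded, "L", scene_is_static=True)
```

For a left-view frame following these four frames, the natural list puts the right-view frame with POC 3 at ref_idx=0, while the reordered list puts the left-view frame with POC 2 there, so skipped MBs can again point at the same view.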

23+ years’ programming and theoretical experience in the computer science fields such as video compression, media streaming and artificial intelligence (co-author of several papers and patents).

