Elements of Human Vision System (HVS)


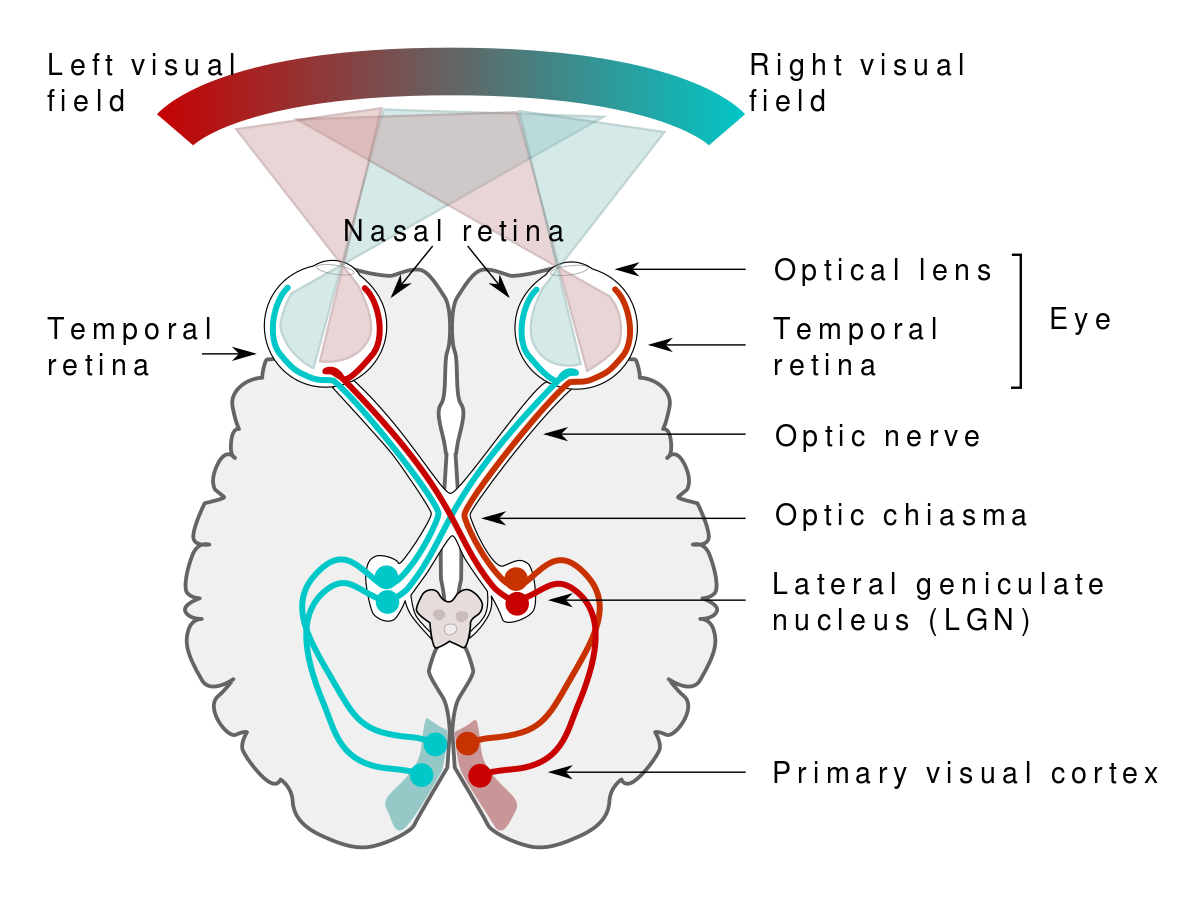

Visual processing in primates is mainly performed by the visual cortex and the Lateral Geniculate Nucleus (both parts of the brain). The eyes are sensors with a simple preprocessing function; all preprocessing (e.g. edge enhancement) is performed by the ganglion cells in the retina. Incidentally, preprocessing by ganglion cells is an excellent example of distributed computation. The visual cortex makes up 30% of the cerebral cortex (compared with 8% for touch and just 3% for hearing).

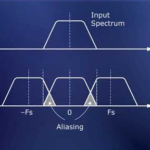

According to the book “Perception”, by R. Blake and R. Sekuler, 2006, visual signals are spatially bandpass filtered by ganglion cells in the retina and then temporally filtered at the Lateral Geniculate Nucleus (LGN) to reduce temporal entropy. Spatio-temporal preprocessing of captured video is therefore justified, since the eyes and the brain do the same: the visual cortex receives spatio-temporally filtered signals.
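A common textbook model of a ganglion cell's center-surround receptive field is a difference of Gaussians (DoG), which behaves as a spatial bandpass filter. The 1D sketch below illustrates that model only; it is not taken from the book, and the sigma values and kernel radius are arbitrary assumptions. It shows that a flat input produces practically no response, while a step edge produces a strong one.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian kernel with taps at -radius..radius."""
    taps = [math.exp(-(x * x) / (2.0 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(taps)
    return [t / total for t in taps]


def dog_kernel(sigma_center=1.0, sigma_surround=3.0, radius=9):
    """Difference of Gaussians: narrow excitatory center minus wide inhibitory
    surround. Both parts sum to 1, so the DoG sums to ~0 (no response to flat input)."""
    center = gaussian_kernel(sigma_center, radius)
    surround = gaussian_kernel(sigma_surround, radius)
    return [c - s for c, s in zip(center, surround)]


def filter_1d(signal, kernel):
    """Convolve a 1D signal with a symmetric kernel, replicating edge samples."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp the index at the borders
            acc += w * signal[j]
        out.append(acc)
    return out


# Flat dark region followed by a step up to a bright region.
signal = [50.0] * 20 + [200.0] * 20
response = filter_1d(signal, dog_kernel())
print([round(r, 1) for r in response[15:25]])
```

Far from the step the response is essentially zero; around the step there is a dip on the dark side and a peak on the bright side, so only the change is passed on.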

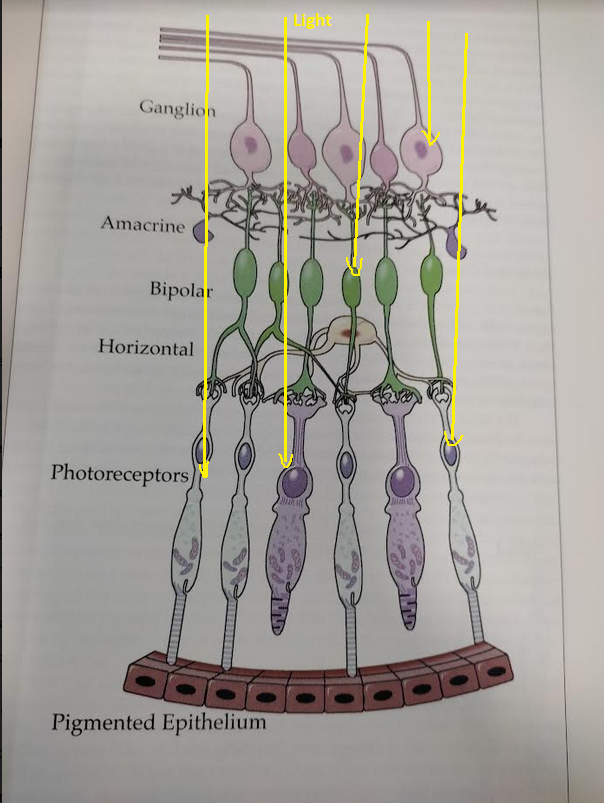

The human eye has an inverted structure: the photoreceptors lie behind the ganglion cells, blood vessels, etc., so the visual world is actually projected onto the photoreceptors together with the shadows of the blood vessels, ganglion cells and so on. Why, then, are the blood vessels not visible?

Photoreceptors project the visual world onto the ganglion cells (it is not a one-to-one mapping), and the ganglion cells project their output to the Lateral Geniculate Nucleus (LGN), the first structure along the visual pathway that receives input from both the left and the right eye (although the two eyes' inputs are kept in separate layers there). From the LGN, the data is projected to the visual cortex. Ganglion cells respond only to changes, which is why the blood vessels, whose shadows never change, are not visible. This raises another question: if you look at a static scene, why is it visible at all? Shouldn't it disappear? The answer is that our eyes are constantly moving, so even a static scene is projected onto the photoreceptors as a changing image.

However, if the muscles that move the eyeball were disabled and the head were held fixed, a static scene would fade away: you would effectively become blind to it.

The main purpose of preprocessing in the retina is to avoid burdening the brain (specifically the visual cortex) with redundant visual information: only information about changes in the visual world is transmitted to the visual cortex.

One more point: we live in a 3D world, but we perceive visual information as a 2D view. What you see is just a projection of the 3D world onto the 2D retina of your eye.

Notes:

- The eye is constantly moving. Its movements can be decomposed into three main types (see J. E. Hoffman, “Visual attention and eye movements,” UK: Psychology Press, 1998): saccades, fixations and smooth pursuits. Saccades are very rapid eye movements that allow humans to explore the visual field. Fixation is the residual movement of the eye when the gaze is fixed on a particular area of the visual field. Smooth pursuit is the ability of the eyes to smoothly track the image of a moving object. Between two saccades, either a fixation or a smooth pursuit occurs.

- A smooth pursuit can last longer than a fixation.

- Even without stimulation (in full darkness) there is a base level of firing (a few pulses per second) from light-receptor cells.

Recognition of primitive shapes (e.g. circles, triangles) is carried out in the brain, in columns of cells in the cerebral cortex. Each column of cells is responsible for detecting its own shape; within a column the processing is serial.

HVS-based video compression relies on eliminating “something” that the human retina eliminates anyway. If we remove more than the retina eliminates, visual impairments become noticeable; if we remove less, the compression ratio is low and the compression is ineffective.

The HVS is particularly sensitive to green, so in the recommended formulas for converting RGB to grayscale the green component (G) has the largest weight, e.g.:

Y = 0.212671R + 0.715160G + 0.072169B
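A minimal sketch of the formula above applied to pixels. The coefficients are exactly those quoted; note that BT.709 applies rounded versions of them (0.2126, 0.7152, 0.0722) to gamma-encoded R'G'B' to obtain luma, whereas on linear-light RGB they give relative luminance. The sample pixels are illustrative.

```python
def rgb_to_luminance(r, g, b):
    """Weighted sum of R, G, B with the coefficients quoted above.
    On linear-light RGB this gives relative luminance Y; applied to
    gamma-encoded R'G'B' (as BT.709 does with rounded weights) it gives luma Y'."""
    return 0.212671 * r + 0.715160 * g + 0.072169 * b


def to_grayscale(pixels):
    """Convert a list of (r, g, b) tuples to a list of gray values."""
    return [rgb_to_luminance(r, g, b) for r, g, b in pixels]


# Pure red, pure green and pure blue at full intensity (8-bit scale).
print([round(y, 1) for y in to_grayscale([(255, 0, 0), (0, 255, 0), (0, 0, 255)])])
# [54.2, 182.4, 18.4]
```

Pure green maps to a gray roughly ten times brighter than pure blue, and since the weights sum to 1, white stays white.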

It is interesting to speculate on the affinity of the HVS for green. Perhaps, in evolutionary terms, it was important for our ancestors to detect a tiger (e.g. a smilodon) or a rabbit in the grass: the former in order to escape, the latter in order to hunt.

The research paper “Issues in vision modeling for perceptual video quality assessment”, by Stefan Winkler, 1999, reports:

"The accompanying sound has also been shown to influence perceived video quality: subjective quality ratings are generally higher when the test scenes are accompanied by a good quality sound program, which apparently lowers the viewers' ability to detect video impairments"

So, if a video is accompanied by poor-quality sound, visual distortions are more noticeable.

The perceived video quality depends on the viewing distance, the display size and other factors. It is common to specify the viewing distance in units of display height, because it is assumed that the perceived quality depends on the ratio of viewing distance to screen height: if this ratio is constant, the perceived video quality is expected to remain unchanged.

It is worth noting that this assumption has been found to be incorrect. The preferred viewing distance is around 6 to 7 screen heights for small displays and 3 to 4 screen heights for large displays. If you have a 4K TV set, I recommend placing your armchair at a distance of about 4 screen heights.
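The recommendation above is easy to turn into metres. A short arithmetic sketch, assuming a 16:9 screen and a 65-inch diagonal purely as an example: the height follows from the diagonal and the aspect ratio, and the distance is a multiple of that height.

```python
import math


def screen_height(diagonal, aspect_w=16, aspect_h=9):
    """Screen height from the diagonal and aspect ratio (same unit as the diagonal)."""
    return diagonal * aspect_h / math.hypot(aspect_w, aspect_h)


def viewing_distance_m(diagonal_inches, heights=4.0, aspect_w=16, aspect_h=9):
    """Viewing distance in metres, expressed as a multiple of the screen height."""
    return heights * screen_height(diagonal_inches, aspect_w, aspect_h) * 0.0254


print(f"{viewing_distance_m(65):.2f} m")  # 65-inch 16:9 set at 4 heights: 3.24 m
```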

The human visual system has a nonlinear, roughly logarithmic response to light intensity.

Features of the human visual system (HVS) that should be taken into account by an effective Rate Control:

Research has shown that subjective video quality depends on many factors, e.g. viewing distance, display size, brightness, contrast, sharpness and colorfulness.

For example, subjective quality ratings are generally higher when the test scenes are accompanied by good-quality sound; apparently, good sound lowers the viewers’ ability to detect video impairments.

Visual distortions are divided into three groups:

- invisible visual impairments (“I can’t see it”)

- non-annoying (“I don’t mind”)

- annoying (bothersome to viewers)

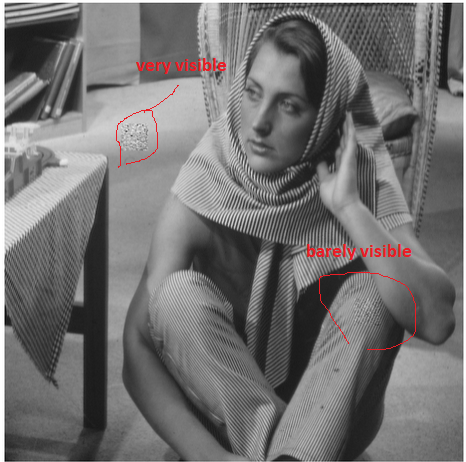

Masking refers to the property of the HVS in which the presence of a ‘strong’ stimulus renders the weaker ‘neighboring’ stimulus imperceptible. Notice that sometimes the opposite effect occurs (a sort of facilitation): a stimulus that is not visible by itself can be detected due to the presence of another. Masking explains why similar coding artifacts are annoying in certain regions of an image while they are barely noticeable elsewhere.

Types of masking:

1. texture masking – where certain distortions are masked in the presence of strong texture

2. contrast masking – where regions with larger contrast mask neighboring areas with lower contrast. The contrast masking effect is commonly computed from the luminance variations around the current pixel in a 5 × 5 estimation window; for example, the paper “A JND-Based Pixel-Domain Algorithm and Hardware Architecture for Perceptual Image Coding”, Zhe Wang et al., 2019, describes a method for calculating contrast masking.

3. temporal masking – where the presence of a temporal discontinuity masks the presence of some distortions.
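As a rough illustration of measuring luminance variation in a 5 × 5 window, the sketch below computes the local standard deviation around each pixel. This is a simplified proxy chosen for illustration, not the contrast-masking formula of Wang et al.; the idea is only that a higher value suggests stronger masking, so larger distortions could hide there. The test image is hypothetical.

```python
import math


def local_std_5x5(img):
    """Standard deviation of luma in the 5x5 window centered on each pixel.
    `img` is a list of rows of luma values; windows are clipped at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 2), min(h, y + 3))
                    for i in range(max(0, x - 2), min(w, x + 3))]
            mean = sum(vals) / len(vals)
            out[y][x] = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))
    return out


# Left half flat, right half a high-contrast checkerboard (hypothetical test image).
img = [[100 if x < 8 else (50 if (x + y) % 2 else 200) for x in range(16)] for y in range(8)]
masking = local_std_5x5(img)
print(round(masking[4][2], 1), round(masking[4][12], 1))
```

The flat area scores zero (no masking), while the checkerboard area scores high.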

The human visual system is more sensitive to low and medium frequencies than to high frequencies. Transform coding makes it possible to exploit this feature: the quantizer step size for higher-frequency coefficients can be increased, and most modern standards support custom quantization matrices to take advantage of this frequency sensitivity. For the same reason, when evaluating image quality it is reasonable to give more weight to distortions at low frequencies. In other words, the quantization matrix is designed so that the high-frequency components, which are not very noticeable to the HVS, are removed from the signal. This allows greater compression of the video signal with little or no perceptual degradation in quality. Contrast sensitivity functions (CSFs) model the decreasing sensitivity of the HVS with increasing spatial frequency.
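A minimal sketch of a frequency-weighted quantization matrix, assuming a simple rule where the step size grows linearly with u + v. The base step and slope are hypothetical values, not taken from any standard's default matrices. A coefficient of the same amplitude survives at DC but is quantized to zero at the highest frequency.

```python
def frequency_weighted_qmatrix(n=8, base_step=16.0, slope=0.25):
    """Hypothetical n x n quantization matrix whose step size grows with u + v,
    i.e. with spatial frequency. base_step and slope are illustrative values,
    not taken from any standard."""
    return [[base_step * (1.0 + slope * (u + v)) for v in range(n)] for u in range(n)]


def quantize(coeffs, qmatrix):
    """Uniform scalar quantization: divide each coefficient by its step and round."""
    return [[round(c / q) for c, q in zip(crow, qrow)] for crow, qrow in zip(coeffs, qmatrix)]


q = frequency_weighted_qmatrix()
coeffs = [[30.0] * 8 for _ in range(8)]  # same amplitude at every frequency
levels = quantize(coeffs, q)
print(q[0][0], q[7][7])            # 16.0 72.0
print(levels[0][0], levels[7][7])  # 2 0: the high-frequency coefficient is discarded
```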

Brightness Sensitivity – the human eye is more sensitive to changes in brightness than to changes in chromaticity. Therefore 4:2:0 chroma subsampling is commonly used.
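A minimal sketch of 4:2:0 chroma subsampling, assuming 2 × 2 block averaging as the downsampling filter (one simple choice; real converters may use other filters and chroma sample positions). Each chroma plane shrinks to a quarter of its size, so a frame carries half as many samples as in 4:4:4.

```python
def subsample_420(plane):
    """Downsample a chroma plane by 2 horizontally and vertically by averaging
    each 2x2 block. Width and height are assumed to be even."""
    h, w = len(plane), len(plane[0])
    return [[(plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4.0
             for x in range(0, w, 2)]
            for y in range(0, h, 2)]


def samples_per_frame(width, height):
    """Full-resolution luma plus two quarter-size chroma planes (1.5 samples per pixel)."""
    return width * height + 2 * (width // 2) * (height // 2)


cb = [[10, 20, 30, 40],
      [30, 40, 50, 60]]
print(subsample_420(cb))              # [[25.0, 45.0]]
print(samples_per_frame(1920, 1080))  # 3110400, half of 4:4:4 (6220800)
```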

Visibility threshold. Visual impairments caused by quantization are more visible in medium-to-dark regions of a picture than in brighter regions, so the quantizer step size in brighter regions can be set larger than in darker regions.

Coding artifacts are far less visible near sharp luminance edges than in flat regions. The abundance of sharp edges is sometimes called the “busyness” of a block. For “busy” blocks the quantizer step size can be set larger than for flat ones. In other words, noise (e.g. quantization noise) is less visible in regions with high spatial variation and more visible in smooth areas.

Errors and distortions will be most obvious in the top section of this scene (taken from the paper “COMPRESSED VIDEO QUALITY”, Iain Richardson)

Note: the masking effect is the strongest when texture and visual impairments have similar frequency content and orientations.
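The “busyness” rule above can be sketched as a per-block QP offset derived from block activity. This is hypothetical: the log2-of-variance mapping resembles the variance-based adaptive quantization found in encoders such as x264, but the constants and clamping here are illustrative assumptions, not any encoder's exact formula. A brightness-dependent term for the visibility-threshold rule could be added in the same way.

```python
import math


def block_variance(block):
    vals = [v for row in block for v in row]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


def activity_qp_offset(block, strength=1.0, ref_variance=64.0, max_offset=6.0):
    """Hypothetical adaptive-quantization rule: busy (high-variance) blocks get a
    positive QP offset (coarser quantization), flat blocks a negative one.
    ref_variance is the activity that maps to an offset of 0."""
    var = max(block_variance(block), 1.0)  # avoid log2(0) for perfectly flat blocks
    offset = strength * math.log2(var / ref_variance)
    return max(-max_offset, min(max_offset, offset))


flat = [[128] * 8 for _ in range(8)]
busy = [[0 if (x + y) % 2 else 255 for x in range(8)] for y in range(8)]
print(activity_qp_offset(flat), activity_qp_offset(busy))  # -6.0 6.0
```

In H.264 and HEVC the quantizer step size roughly doubles for every +6 in QP, so the ±6 clamp corresponds to at most halving or doubling the step size.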

Temporal Visual Masking: coding artifacts on fast-moving objects are far less perceptible than on slowly moving ones; in other words, the accuracy of visual perception is significantly reduced when motion is fast. Blocks containing fast-moving objects can therefore be quantized more coarsely. When a scene contains a lot of motion, the sensitivity of the HVS is reduced (the HVS is distracted by too many moving objects), and it is worth quantizing more harshly. This phenomenon is sometimes called the motion masking effect (i.e., the reduction of distortion visibility due to large motion changes). Perhaps the human visual system can process only a limited amount of temporal visual activity per second, and when this limit is exceeded, visual acuity drops.

Scene Cut masking. The ability of the human visual system to notice coding artifacts is significantly reduced after a scene cut. The first pictures of the new scene can be quantized more harshly without compromising visual quality.

According to the paper “Visual masking at video scene cuts”, by W.J. Tam et al. (which is itself based on earlier reports), the visibility of coding artifacts AROUND a scene cut is significantly reduced (masked) in the first frame after the cut and in the frame before it. The reduction in the visibility of visual impairments after a scene cut is called “forward masking” (a similar effect is observed in audio perception). In addition to forward masking, another unexpected phenomenon called “backward masking” is observed at scene cuts: the visibility of coding artifacts in the frame before a scene cut is significantly reduced (a similar backward masking is also observed in audio perception). Backward masking may be explained by video frames being buffered in some way; otherwise it would contradict causality, since the scene cut occurs after the backward frame and nevertheless affects how that frame is perceived.

In the paper “The Information Theoretical Significance of Spatial and Temporal Masking in Video Signals”, B. Girod, 1989, it is reported that forward temporal masking is significant only in the first 100 ms after a scene cut.

According to the M.A. thesis “Visual Temporal Masking at Video Scene Cuts”, by Carol English, 1997, visual masking is observed within three frames on each side of a scene cut, but the masking strength was found to vary with image content. Moreover, forward masking was found to conceal more noise than backward masking. The strongest masking effects were observed in the first frame after a scene cut and in the last frame before it; in other words, the frames immediately around a scene cut can be degraded severely without affecting perceived image quality.

For low-latency applications, a sudden temporal decorrelation (i.e. a scene cut) causes a bitrate spike, which in turn might increase latency and even cause lip-sync issues. To avoid these problems, it is beneficial to exploit scene cut masking and encode the following frames with coarser quantization without risking visual quality (because scene cut masking hides some of the image degradation in the frames around the cut).
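The scene-cut findings above can be turned into a per-frame QP offset schedule. The sketch below is hypothetical: the window of three frames on each side follows English's thesis, converting Girod's 100 ms into frames is simple arithmetic, and the offset values (+4 after the cut, +2 before it, decaying linearly) are illustrative assumptions to be tuned, not published figures.

```python
def masking_window_frames(fps, window_ms=100.0):
    """Number of frames covered by a forward-masking window (e.g. ~100 ms)."""
    return max(1, round(fps * window_ms / 1000.0))


def scene_cut_qp_offsets(num_frames, cuts, frames_after=3, frames_before=3,
                         max_forward=4.0, max_backward=2.0):
    """Hypothetical QP offsets around scene cuts. `cuts` holds the index of the
    first frame of each new scene. The offset is largest on the frames adjacent
    to the cut and decays linearly with distance; forward masking (after the cut)
    gets a larger offset than backward masking (before the cut)."""
    offsets = [0.0] * num_frames
    for cut in cuts:
        for d in range(frames_after):  # cut, cut + 1, ...: forward masking
            i = cut + d
            if 0 <= i < num_frames:
                offsets[i] = max(offsets[i], max_forward * (frames_after - d) / frames_after)
        for d in range(1, frames_before + 1):  # cut - 1, cut - 2, ...: backward masking
            i = cut - d
            if 0 <= i < num_frames:
                offsets[i] = max(offsets[i], max_backward * (frames_before - d + 1) / frames_before)
    return offsets


print(masking_window_frames(30))  # 3 frames at 30 fps
print([round(o, 2) for o in scene_cut_qp_offsets(12, cuts=[6])])
```

In a real encoder such offsets would be added to the rate-control QP of each frame, which also helps flatten the bitrate spike at the cut.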

Distortions around strong edges are more easily noticed by the human visual system than those in textured regions, because strong edges attract more attention (presumably an evolution-based feature).

The oblique effect is the phenomenon whereby visual acuity is better for gratings (or lines) oriented at 0 or 90 degrees (horizontal or vertical) than for gratings oriented at 45 degrees. In other words, distortions in oblique gratings are less visible.

Perceptual saturation effect: beyond a certain threshold, quality improvements or degradations can no longer be distinguished by end users. For example, an improvement in video quality from 45 dB to 47 dB PSNR is indistinguishable.
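To put the 45 dB vs 47 dB example in perspective, here is a minimal PSNR sketch for 8-bit samples, PSNR = 10·log10(255² / MSE), together with its inverse. It shows that at this level the mean squared error is already only about 2 (squared gray levels), and a 2 dB gain reduces it to about 1.3.

```python
import math


def psnr(ref, dist, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized sample lists."""
    mse = sum((a - b) ** 2 for a, b in zip(ref, dist)) / len(ref)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_for_psnr(db, max_value=255.0):
    """Inverse of the formula above: the MSE that yields a given PSNR."""
    return max_value ** 2 / 10 ** (db / 10.0)


print(round(mse_for_psnr(45), 2), round(mse_for_psnr(47), 2))  # 2.06 1.3
```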

Humans tend to perceive “poor” regions in an image with more severity than “good” ones, and hence heavily penalize images with even a small number of “poor” regions. In other words, the worst-quality regions of an image dominate human perception of its quality.
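One way to reflect this when pooling local quality scores into a single number is to average only the worst fraction of the scores instead of all of them (percentile pooling). The sketch assumes per-block scores where higher is better; the 10% fraction and the scores are illustrative assumptions.

```python
import math


def mean_pooling(scores):
    return sum(scores) / len(scores)


def worst_percent_pooling(scores, fraction=0.1):
    """Average of the lowest `fraction` of local quality scores (at least one score)."""
    k = max(1, math.ceil(len(scores) * fraction))
    return sum(sorted(scores)[:k]) / k


# 19 good blocks and one badly distorted block (hypothetical local scores, 0..100).
scores = [90.0] * 19 + [20.0]
print(mean_pooling(scores), worst_percent_pooling(scores))  # 86.5 55.0
```

Plain averaging hides the single bad block, while worst-percent pooling lets it pull the overall score down, closer to how viewers react.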

Screen Content Objects Sensitivity: a perceptible distortion may be more annoying in some areas of the scene such as human faces than at others.

Perceptual Visual Distortions as Result of Encoding

1. Staircase artifacts tend to appear on diagonal edges as a result of encoding, because the horizontal and vertical DCT or DST basis functions cannot accurately represent diagonal edges.

2. Blocking artifacts occur due to the division of frames into rectangular macroblocks. All blocks are coded separately from one another despite the spatial correlation that may exist between them, which yields visible spurious edges at block borders. In most cases, blocking artifacts are easily spotted by the Human Visual System (HVS) as a regular structure that does not belong to the image.

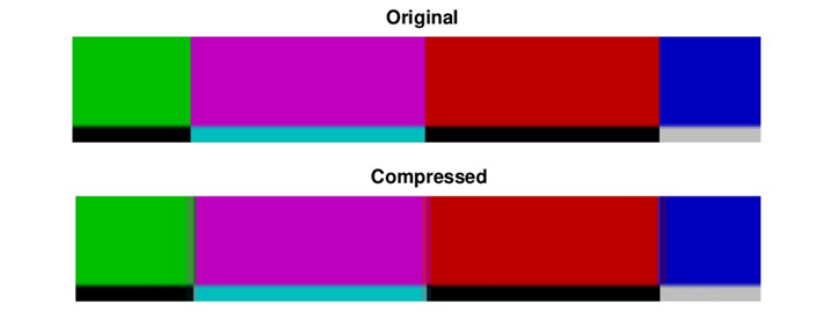

3. Color bleeding – highly saturated colors can bleed from the source object into the background. Color bleeding is a result of converting RGB to YUV 4:2:0 and is easily spotted by the Human Visual System on saturated colors.

4. Ghosting appears as a blurred remnant trailing behind fast moving objects. Motion compensation mismatch occurs as a result of the assumption that all pixels of a macroblock undergo identical motion shifts from one frame to another.

5. Mosquito noise is a temporal artifact seen as fluctuations in smooth regions surrounding high contrast edges or moving objects, while stationary area fluctuations resemble the mosquito effect but occur in textured regions of scenes.

6. Temporal silencing is a phenomenon triggered by the presence of large temporal image flows. In a series of “illusions” devised by Suchow and Alvarez, objects changing in hue, luminance, size or shape appear to stop changing when they move. For details, please refer to the paper “Motion Silences Awareness of Visual Change”, by Jordan W. Suchow and George A. Alvarez, Department of Psychology, Harvard University, 2011.

7. Ringing and Blur Distortions

Ringing appears as ripple structures around strong edges (the well-known Gibbs phenomenon).

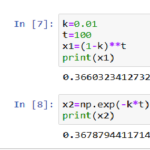

Blurring is caused by the elimination of high-frequency coefficients by coarse quantization. One of the simplest methods to assess blurriness (or sharpness, as the reciprocal of blurriness) is to compute the standard deviation of an image after applying the Laplacian operator. I wrote the Python script GetSharpnessScores.py to calculate the sharpness score per frame:

Usage:

-i input H.264 or HEVC file (encoded stream)

-n number of frames to process; if 0, all frames are processed (default 0)

-v verbose mode: print the sharpness of each frame (default false)

Note: the script uses ffmpeg (to decode the stream into a yuv420p file) and ffprobe (to get the width and height of the encoded stream), so it is up to the user to put ffmpeg and ffprobe in a folder listed in the PATH variable. The temporary file rec.yuv is created while decoding the input stream and deleted afterwards.

Example: compute sharpness scores per frame

python GetSharpnessScores.py -i box512.h264 -v

width 640

height 480

frame 0 sharpness 17.675

frame 1 sharpness 17.532

frame 2 sharpness 16.850

frame 3 sharpness 16.828

frame 4 sharpness 16.164

frame 5 sharpness 15.480

frame 6 sharpness 15.656

frame 7 sharpness 15.807

frame 8 sharpness 16.015

frame 9 sharpness 15.168

frame 10 sharpness 15.301

frame 11 sharpness 15.170

frame 12 sharpness 15.853

frame 13 sharpness 15.693

frame 14 sharpness 15.442

frame 15 sharpness 15.073

frame 16 sharpness 15.166

frame 17 sharpness 14.564

frame 18 sharpness 14.668

frame 19 sharpness 14.403

frame 20 sharpness 15.051

frame 21 sharpness 14.979

frame 22 sharpness 15.411

...

frame 447 sharpness 15.488

frame 448 sharpness 15.315

frame 449 sharpness 15.943

frame 450 sharpness 14.892

frame 451 sharpness 15.348

frame 452 sharpness 14.879

frame 453 sharpness 15.805

frame 454 sharpness 15.765

frame 455 sharpness 15.366

frame 456 sharpness 15.299

Average sharpness of box512.h264 14.551
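The metric itself (standard deviation of the Laplacian-filtered luma) can be sketched in a few lines. This is a hypothetical re-implementation, not the source of GetSharpnessScores.py: it assumes the 4-neighbour Laplacian kernel, skips border pixels, uses the population standard deviation, and reads 8-bit yuv420p frames (Y plane first, then two quarter-size chroma planes) from a file such as the decoded rec.yuv.

```python
import statistics


def laplacian_sharpness(luma):
    """Standard deviation of the 4-neighbour Laplacian of a luma plane
    (list of rows). Border pixels are skipped. Blurrier frames score lower."""
    h, w = len(luma), len(luma[0])
    lap = [luma[y - 1][x] + luma[y + 1][x] + luma[y][x - 1] + luma[y][x + 1] - 4 * luma[y][x]
           for y in range(1, h - 1) for x in range(1, w - 1)]
    return statistics.pstdev(lap)


def read_luma_frames(path, width, height):
    """Yield the Y plane of each frame of an 8-bit yuv420p file as a list of rows.
    Each frame holds width*height luma bytes followed by two quarter-size chroma planes."""
    frame_size = width * height * 3 // 2
    with open(path, "rb") as f:
        while True:
            frame = f.read(frame_size)
            if len(frame) < frame_size:
                break
            yield [list(frame[r * width:(r + 1) * width]) for r in range(height)]


def average_sharpness(path, width, height):
    """Mean per-frame sharpness over all frames of a raw yuv420p file."""
    scores = [laplacian_sharpness(y) for y in read_luma_frames(path, width, height)]
    return sum(scores) / len(scores) if scores else 0.0
```

For example, `average_sharpness("rec.yuv", 640, 480)` would score a decoded 640 × 480 stream; absolute values need not match the script's output, since its exact kernel and normalization are not shown here.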

8. Texture Masking

When the same amount of random noise is added to backgrounds with different frequency distributions, it is noticed differently: noise added to a flat (low-frequency) background is much more visible than noise added to a textured (high-frequency) background.

taken from the paper “A Human Visual System-Based Objective Video Distortion Measurement System”, Zhou Wang and Alan C. Bovik

9. Banding

Artificial contouring in nearly flat areas (e.g. a blue sky).

To cope with banding distortions, a technique called dithering is commonly used: noise is intentionally added to randomize quantization errors and thus reduce the visibility of banding.
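The effect of dithering can be sketched by quantizing a slow ramp (like a sky gradient) to coarse levels with and without uniform noise of ±half a step added before rounding. The step size, noise shape, seed and the box blur used as a crude stand-in for the eye's spatial integration are all illustrative assumptions; real encoders and renderers use various dither patterns.

```python
import math
import random


def quantize(value, step):
    """Round to the nearest multiple of `step` (halves rounded up)."""
    return step * math.floor(value / step + 0.5)


def quantize_signal(values, step, dither=False, seed=0):
    """Quantize each value; with dither=True, add uniform noise in
    [-step/2, step/2] first so the quantization error is randomized."""
    rng = random.Random(seed)
    return [quantize(v + (rng.uniform(-step / 2, step / 2) if dither else 0.0), step)
            for v in values]


def box_blur(values, radius=4):
    """Moving average: a crude stand-in for the eye's spatial integration."""
    out = []
    for i in range(len(values)):
        window = values[max(0, i - radius):i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def perceived_error(original, quantized):
    """Mean absolute difference after blurring both signals."""
    a, b = box_blur(original), box_blur(quantized)
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


ramp = [i / 10.0 for i in range(200)]  # slow ramp from 0 to 19.9
banded = quantize_signal(ramp, step=4)
dithered = quantize_signal(ramp, step=4, dither=True)
print(perceived_error(ramp, banded), perceived_error(ramp, dithered))
```

The plain quantizer produces wide flat bands with visible jumps, while the dithered output, once blurred, follows the ramp more closely; the exact numbers depend on the seed.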

The author has 23+ years of programming and theoretical experience in computer science fields such as video compression, media streaming and artificial intelligence (co-author of several papers and patents).
